Recent developments in AI haven't just brought more sophisticated and smarter chatbots; they have also brought significant challenges, particularly with the rise of deepfakes. While most AI-generated fakes are rendered after the fact, a new tool takes things up a notch with a real-time AI face swap that can be applied during video calls.

A new AI project called Deep-Live-Cam has recently gained traction online for its ability to apply deepfakes to webcam feeds. At the same time, it has sparked discussion about the potential security hazards and ethical implications it poses.

How Deep-Live-Cam is different from other deepfake programs

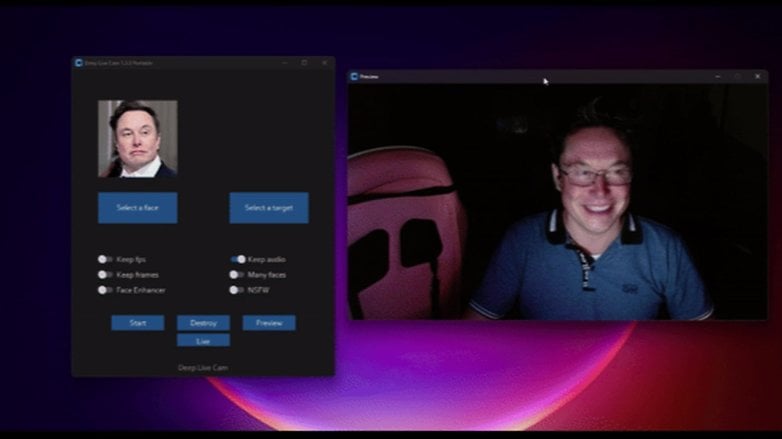

Essentially, Deep-Live-Cam uses AI models that take a single source image and apply that face to a target during live video, such as a webcam feed. While the project is still under development, the initial tests already show results that are both impressive and concerning.

As Ars Technica further describes, the application first detects faces in both the source image and the target subject. It then uses an inswapper model to swap the faces in real time, while another model enhances the quality of the faces and adds effects that adapt to changing lighting conditions and facial expressions. This multi-stage process makes the end result highly realistic and not easily recognizable as a fake.
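The order of those stages can be sketched in a few lines of Python. This is only an illustration of the detect, swap, enhance flow described above, not Deep-Live-Cam's actual code: `process_frame`, the `toy_*` functions, and the dictionary used as a frame are all hypothetical stand-ins, and the source face is reduced to a file name for simplicity.

```python
from typing import Callable, List, Tuple

Frame = dict  # placeholder for an image; a real pipeline would use pixel arrays
Box = Tuple[int, int, int, int]  # hypothetical face bounding box (x, y, w, h)


def process_frame(
    frame: Frame,
    source_face: str,
    detect: Callable[[Frame], List[Box]],
    swap: Callable[[Frame, Box, str], Frame],
    enhance: Callable[[Frame, Box], Frame],
) -> Frame:
    """Run one webcam frame through detect -> swap -> enhance."""
    boxes = detect(frame)
    if not boxes:
        return frame  # no face found: pass the frame through untouched
    for box in boxes:
        frame = swap(frame, box, source_face)  # put the source face on the target
        frame = enhance(frame, box)            # clean up the swapped region
    return frame


# Toy stand-ins that only log what they were asked to do (all hypothetical).
def toy_detect(frame: Frame) -> List[Box]:
    return frame.get("faces", [])


def toy_swap(frame: Frame, box: Box, source_face: str) -> Frame:
    return {**frame, "log": frame.get("log", []) + [f"swap:{source_face}"]}


def toy_enhance(frame: Frame, box: Box) -> Frame:
    return {**frame, "log": frame.get("log", []) + ["enhance"]}


if __name__ == "__main__":
    frame = {"faces": [(10, 10, 64, 64)]}
    out = process_frame(frame, "source.jpg", toy_detect, toy_swap, toy_enhance)
    print(out["log"])
```

In a real tool, each callable would wrap a trained model and the loop would run once per captured frame, which is why the speed of every stage matters for a live video call.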

For example, one of the clips shared by a developer showed a realistic fusion of Tesla CEO Elon Musk's face onto a subject. The deepfake even preserved the subject's prescription glasses and hair as an overlay, making it highly convincing. Other examples used the faces of US vice presidential candidate JD Vance and Meta's Mark Zuckerberg.

Should you worry about the rise of AI simulation apps?

So, why is this especially concerning? The use of Deep-Live-Cam and other real-time deepfake apps raises serious concerns about privacy and security. Imagine that a picture of you could be grabbed from the internet and used for fraud, deception, and other malicious activities without your permission.

For now, these risks could be addressed in a few ways, such as adding watermarks to video produced with the app and developing robust detection methods. The same measures could also be applied to other deepfake programs and apps.

Interest in the tool quickly pushed it onto the list of trending projects on GitHub.

What are your thoughts on real-time AI simulation apps? Do you have any practices to share on how to protect yourself from these potential risks? We would love to hear your answers in the comments.