In 2017, a group of researchers (from Google and the University of Toronto) introduced a new way to handle natural language processing (NLP) tasks. Their groundbreaking paper “Attention Is All You Need” presented the Transformer model, an architecture that has since become the basis of many advanced AI systems today. The model’s performance, scalability, and versatility have led to its widespread adoption, forming the backbone of state-of-the-art models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer).

Before the Transformer model, most AI models that processed language, particularly for tasks like language modeling and machine translation (also called sequence transduction), relied heavily on a type of neural network called a Recurrent Neural Network (RNN) or its improved version, the Long Short-Term Memory network (LSTM). These models processed words in a sequence, one at a time, from left to right (or vice versa). While this approach made sense because words in a sentence often depend on the preceding words, it had some significant drawbacks:

- Slow to train: Since RNNs and LSTMs process one word at a time, training these models on large datasets was time-consuming.

- Difficulty with long sentences: These models often struggled to capture relationships between words that were far apart in a sentence.

- Limited parallelization: Because the words were processed sequentially, it was hard to take advantage of modern computing hardware that thrives on doing many operations at once (parallelization).
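To make the sequential bottleneck concrete, here is a minimal sketch of a toy RNN in Python. It is not an LSTM or any particular library’s RNN: it assumes a single scalar hidden state and hypothetical weights `w_x` and `w_h` chosen only for illustration. The point is the loop: each hidden state is computed from the previous one, so the steps cannot run in parallel.

```python
import math

def rnn_forward(inputs, w_x, w_h, h0=0.0):
    """Toy scalar RNN: returns the hidden state after each input."""
    h = h0
    states = []
    for x in inputs:
        # h_t depends on h_{t-1}, so step t cannot start until step t-1 is done.
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

# Hypothetical weights and inputs, purely for illustration.
states = rnn_forward([1.0, 0.5, -0.3], w_x=0.8, w_h=0.5)
print([round(s, 3) for s in states])
```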

The Key Idea: Attention

The core idea behind the Transformer model is something called “attention.” In simple terms, attention helps the model focus on specific parts of a sentence when trying to understand the meaning or context of a word. Consider the sentence, “The car that was parked in the garage is blue.” When you think about the word “blue,” you naturally focus on the word “car” earlier in the sentence because it tells you what is blue. A machine translation model, however, could struggle to determine whether “blue” refers to the car or the garage. This is the problem self-attention addresses: it helps the model focus on the relevant words, no matter where they are in the sentence.
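For reference, the original paper formalizes this as scaled dot-product attention. Each word is turned into a query, a key, and a value vector (stacked into matrices Q, K, and V), and d_k is the dimension of the keys:

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

Each row of the softmax result is a set of weights that sums to 1, describing how strongly one word attends to every word in the sentence. In the example, the row for “blue” would ideally place a large weight on “car.”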

Note that attention wasn’t a new concept; it was already being used in tandem with RNNs. The Transformer was the first transduction model that relied entirely on attention, eliminating the need for recurrent networks. This gave it the following advantages:

- Parallel processing: Unlike RNNs, which process words one after another, the Transformer can process all words in a sentence at the same time. This makes training much faster.

- Better understanding of context: Thanks to the self-attention mechanism, the Transformer can capture relationships between words no matter how far apart they are in a sentence. This is crucial for understanding complex sentences.

- Scalability: The model can be scaled up easily by adding more layers, allowing it to handle very large datasets and complex tasks.

As you can see, the new model not only addressed these disadvantages of RNNs but also improved machine translation performance!

Since the original paper can be a little hard to follow, here is a simpler explanation of the model architecture it describes.

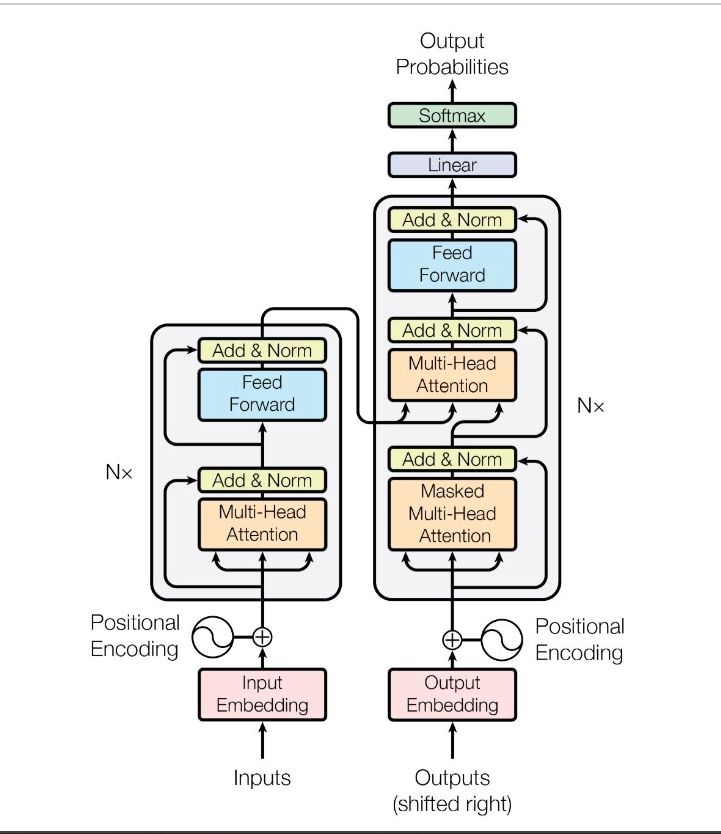

Encoder and Decoder Stacks

The Transformer consists of an encoder stack (on the left) and a decoder stack (on the right). The encoder stack converts the input sequence (like a sentence) into a set of continuous representations, while the decoder stack converts these representations into an output sequence (like a translation). For each stack, going from bottom to top, here are the core components of the model, explained with an example.

1. Input Sentence Processing in the Encoder

- Inputs: The text that you want to translate, e.g., “The car that was parked in the garage is blue.”

- Input embedding: Converts words into fixed-length numerical representations (vectors) called embeddings. These embeddings capture the semantic meaning of words in a form the model can work with. From our example:

- “The” -> [0.9, -0.4, 0.2, …]

- “car” -> [0.5, 0.1, -0.7, …]

- “that” -> [-0.8, 0.2, 0.8, …]

- … and similarly for each word in the sentence above

- Positional encoding: Since the model processes all the input embeddings at once and the embeddings themselves carry no ordering, it needs a way to understand the order of words in a sentence. Positional encoding adds information about the position of each word in the sequence to its embedding.

- “The” at position 1 might get adjusted to [0.9 + p1, -0.4 + p2, 0.2 + p3, …], where P1 = [p1, p2, p3, …] is the positional encoding vector for the first position, producing a new embedding that reflects both the word and its position.

- Self-attention mechanism: As described earlier, this allows the model to focus on different words in a sentence depending on the context. For each word, the self-attention mechanism calculates scores that represent how much focus should be given to the other words when encoding the current word.

- For the word “car,” self-attention might determine that “parked,” “garage,” and “blue” are particularly relevant to understanding its context.

- Multi-head attention: A novel part of the Transformer model. Multi-head attention is simply multiple self-attention operations (heads) running in parallel, whose outputs are concatenated and then passed through a linear projection.

- For example, one head might focus on the main subject (“car”) and its properties (“blue”), while another head might focus on the relative clause (“that was parked in the garage”).

- The multi-head attention module gives the model the capability to understand that “blue” is more related to the car than to the garage.

- Feed-forward neural networks: After the self-attention layer, the output is passed through a feed-forward neural network that is applied to each position separately and identically (so, once again, it can be run in parallel!). This consists of two linear transformations with a ReLU activation in between.

- Add and norm: Residual connections (add) add the input of each sub-layer to its output, and the result is then normalized (norm). This makes deep networks easier to train by helping keep gradients from vanishing or exploding.
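The encoder components described above can be sketched in plain Python (standard library only) to show how data flows through one encoder layer. This is a toy illustration under stated assumptions, not the paper’s implementation: the sizes (4 words, `d_model = 8`, 2 heads) and the random, untrained weights are hypothetical, the helper names are made up, the feed-forward biases and layer norm’s learned scale and shift are omitted, and random vectors stand in for real word embeddings. The positional encoding follows the sine/cosine formula from the original paper.

```python
import math
import random

def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

def positional_encoding(seq_len, d_model):
    """Sinusoidal encoding: sin on even dimensions, cos on odd dimensions."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, [list(col) for col in zip(*k)])  # Q K^T
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)

def multi_head_attention(x, heads, w_o):
    """Run each head separately, concatenate the results, then project with w_o."""
    outputs = [attention(matmul(x, wq), matmul(x, wk), matmul(x, wv))
               for wq, wk, wv in heads]
    concat = [sum((out[i] for out in outputs), []) for i in range(len(x))]
    return matmul(concat, w_o)

def feed_forward(x, w1, w2):
    """Position-wise FFN: ReLU between two linear maps (biases omitted)."""
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)

def add_and_norm(x, sublayer_out, eps=1e-6):
    """Residual connection followed by layer normalization (no learned scale/shift)."""
    result = []
    for a, b in zip(x, sublayer_out):
        s = [p + q for p, q in zip(a, b)]
        mean = sum(s) / len(s)
        var = sum((t - mean) ** 2 for t in s) / len(s)
        result.append([(t - mean) / math.sqrt(var + eps) for t in s])
    return result

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def encoder_layer(x, params):
    """Multi-head self-attention, then FFN, each wrapped in add & norm."""
    x = add_and_norm(x, multi_head_attention(x, params["heads"], params["w_o"]))
    return add_and_norm(x, feed_forward(x, params["w1"], params["w2"]))

# Hypothetical sizes and random (untrained) weights, for illustration only.
rng = random.Random(0)
seq_len, d_model, n_heads, d_ff = 4, 8, 2, 16
d_head = d_model // n_heads
params = {
    "heads": [tuple(rand_matrix(d_model, d_head, rng) for _ in range(3))
              for _ in range(n_heads)],
    "w_o": rand_matrix(d_model, d_model, rng),
    "w1": rand_matrix(d_model, d_ff, rng),
    "w2": rand_matrix(d_ff, d_model, rng),
}
embeddings = rand_matrix(seq_len, d_model, rng)  # stand-ins for word embeddings
pe = positional_encoding(seq_len, d_model)
x = [[e + p for e, p in zip(er, pr)] for er, pr in zip(embeddings, pe)]
out = encoder_layer(x, params)
print(len(out), len(out[0]))
```

Every position passes through the same matrix multiplications, so in practice these Python loops are replaced by a few large matrix operations that a GPU can run in parallel. A full encoder stacks several such layers, each with its own weights.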

2. Generating the Translation in the Decoder

In NLP, it’s common to mark the start of a sequence with a special start-of-sequence token, written here as `<SOS>`.

- Input to the decoder: The decoder starts with the encoded representation of the English sentence from the encoder. Notice that the decoder also takes its own previous outputs as input. Since there is no previous output for the first word, we insert `<SOS>` at the start.

- Masked self-attention: In the decoder, a masked self-attention mechanism ensures that each word in the output sequence can only attend to itself and the words before it. This prevents the model from looking ahead and ensures it generates the translation one word at a time, from left to right.

For example, when the decoder is about to generate the word “La” (the first word in French), it only knows the context from `<SOS>`.
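A minimal sketch of how the decoder’s mask can work, in plain Python: the scores below are made-up numbers for the three-token input `<SOS> La voiture`, and the function names are hypothetical. Masked-out (future) positions simply get zero weight, which is equivalent to the common practice of setting their scores to negative infinity before the softmax.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j, i.e. j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, mask):
    """Row-wise softmax that gives zero weight to masked-out (future) positions."""
    weights = []
    for row, allowed in zip(scores, mask):
        m = max(s for s, ok in zip(row, allowed) if ok)  # for numerical stability
        exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(row, allowed)]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

# Made-up attention scores for the decoder input "<SOS> La voiture".
tokens = ["<SOS>", "La", "voiture"]
scores = [[0.2, 1.0, 0.5],
          [0.3, 0.8, 2.0],
          [0.1, 0.4, 0.9]]
weights = masked_softmax(scores, causal_mask(len(tokens)))
for tok, row in zip(tokens, weights):
    print(tok, [round(w, 2) for w in row])
```

The first row puts all of its weight on `<SOS>`: when predicting “La,” the decoder can see nothing else, even though the raw score for a later position was higher.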

- Feed-forward neural networks: The decoder applies another feed-forward neural network to process this information further, refining the translation step by step.

In the decoder, after passing its inputs through multiple layers of masked self-attention, encoder-decoder attention, and feed-forward networks, we obtain a continuous representation (a vector of floats) for each position in the target sentence (French in our case). These representations need to be converted into actual words. This is where the final linear and softmax layers come into play.

- Linear layer: This is a fully connected neural network layer that transforms the output of the last decoder layer (a dense vector for each position) into a vector the size of the target vocabulary (for example, every French word the model knows).

- Softmax layer: After the linear transformation, a softmax function converts these logits (raw scores) into probabilities. These probabilities indicate the likelihood of each word in the target vocabulary being the correct next word in the translation. This lets us choose which word from the French vocabulary to output (the entry with the highest probability).
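The final linear and softmax steps can be sketched in plain Python. Everything here is hypothetical: a three-word vocabulary, a three-number decoder output, and hand-picked weights. A real model’s vocabulary and vectors are far larger, and its weights are learned during training.

```python
import math

def linear(x, weights, bias):
    """Fully connected layer: one logit per vocabulary word (weights[k] belongs to word k)."""
    return [sum(xi * wi for xi, wi in zip(x, w_row)) + b for w_row, b in zip(weights, bias)]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical tiny vocabulary and hand-picked weights, for illustration only.
vocab = ["La", "voiture", "bleue"]
decoder_output = [0.5, -1.0, 2.0]  # final decoder vector for one position
weights = [[1.0, 0.0, 0.5],        # one weight row per vocabulary word
           [0.0, 1.0, 0.0],
           [-0.5, 0.0, 0.2]]
bias = [0.0, 0.0, 0.0]

logits = linear(decoder_output, weights, bias)
probs = softmax(logits)
best = max(range(len(vocab)), key=lambda k: probs[k])  # highest-probability word
print(vocab[best], [round(p, 3) for p in probs])
```

Because softmax preserves the ordering of the logits, picking the highest probability gives the same word as picking the highest raw score; the probabilities themselves matter mainly for training and for decoding strategies that compare alternatives.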

The decoder essentially does this:

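- First step: The decoder starts with `<SOS>` and generates the first word, “La.”

- Second step: With the input `<SOS>` La, the decoder generates the next word, “voiture.”

- Third step: The decoder takes `<SOS>` La voiture and generates “qui.”

- Continuing process: This process continues, generating “était,” “garée,” “dans,” “le,” “garage,” “est,” and finally, “bleue.”

- End-of-sequence token: The decoder eventually generates an end-of-sequence token, `<EOS>`, to signal that the translation is complete.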
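The step-by-step loop above, always taking the most probable next word, is often called greedy decoding. A minimal sketch in Python, where `next_word` is a hypothetical function standing in for the whole decoder plus the final linear and softmax layers. Here it simply replays the full French translation of the example sentence, so the code demonstrates only the control flow, not a real model.

```python
def greedy_decode(next_word, max_len=20, sos="<SOS>", eos="<EOS>"):
    """Generate one word at a time, feeding the words so far back in as input."""
    output = [sos]
    while len(output) - 1 < max_len:  # cap the number of generated words
        word = next_word(output)      # a real decoder would also use the encoder output
        if word == eos:
            break
        output.append(word)
    return output[1:]  # drop the <SOS> token

# Hypothetical stand-in for the trained model: replays a fixed translation.
TARGET = "La voiture qui était garée dans le garage est bleue".split()

def fake_next_word(prefix):
    i = len(prefix) - 1  # number of words generated so far
    return TARGET[i] if i < len(TARGET) else "<EOS>"

print(" ".join(greedy_decode(fake_next_word)))
```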

Final Thoughts

By combining these steps, the Transformer can understand an entire sentence’s structure and meaning more effectively than earlier models. The self-attention mechanism and parallel processing enable the Transformer to capture the nuances and structure of both the source and target languages, making it highly proficient at tasks like machine translation.