Today, a number of important and safety-critical decisions are being made by deep neural networks. These include driving decisions in autonomous vehicles, diagnosing diseases, and operating robots in manufacturing and construction. In all such cases, scientists and engineers claim that these models help make better decisions than humans and hence help save lives. However, how these networks reach their decisions is often a mystery, not just to their users but also to their developers.

These changing times thus require that, as engineers, we spend more time unboxing these black boxes so that we can identify the biases and weaknesses of the models we build. This may also allow us to identify which part of the input is most critical for the model and hence verify its correctness. Finally, explaining how models make their decisions will not only build trust between AI products and their consumers but also help meet diverse and evolving regulatory requirements.

The whole field of explainable AI is dedicated to figuring out the decision-making process of models. In this article, I would like to discuss some of the prominent explanation techniques for understanding how computer vision models arrive at a decision. These techniques can also be used to debug models or to analyze the importance of different components of a model.

The most common way to understand model predictions is to visualize heat maps of layers close to the prediction layer. These heat maps, when projected onto the image, allow us to understand which parts of the image contribute more to the model’s decision. Heat maps can be generated using either gradient-based methods like CAM or Grad-CAM, or perturbation-based methods like I-GOS or I-GOS++. A bridge between these two approaches, Score-CAM, uses the increase in model confidence scores to provide a more intuitive way of generating heat maps. In contrast to these techniques, another class of papers argues that these models are too complex for us to expect just a single explanation for their decision. Most significant among these papers is the Structured Attention Graphs method, which generates a tree to provide multiple possible explanations for how a model reaches its decision.

Class Activation Map (CAM) Based Approaches

1. CAM

Class Activation Map (CAM) is a technique for explaining the decision-making of specific types of image classification models. Such models have final layers consisting of a convolutional layer followed by global average pooling and a fully connected layer that predicts the class confidence scores. The technique identifies the important regions of the image by taking a weighted linear combination of the activation maps of the final convolutional layer. The weight of each channel comes from its associated weight in the following fully connected layer. It is quite a simple technique, but since it works only for a very specific architectural design, its application is limited. Mathematically, the CAM approach for a specific class c can be written as:

$$L^{c}_{\text{CAM}} = \text{ReLU}\left(\sum_{k} w_{k}^{c} A^{k}\right)$$

where $w_{k}^{c}$ is the weight for the activation map $A^{k}$ of the kth channel of the convolutional layer.

ReLU is used because only the positive contributions of the activation maps are of interest for generating the heat map.
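The weighted combination described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function name `class_activation_map`, the array layouts, and the toy numbers are all assumptions made for the example.

```python
import numpy as np

def class_activation_map(activations, fc_weights, class_idx):
    """Weighted sum of the final conv layer's activation maps, followed by ReLU.

    activations: array of shape (K, H, W), one map per channel (assumed layout).
    fc_weights:  array of shape (num_classes, K) from the fully connected layer.
    """
    channel_weights = fc_weights[class_idx]                   # shape (K,)
    cam = np.tensordot(channel_weights, activations, axes=1)  # shape (H, W)
    return np.maximum(cam, 0.0)                               # keep positive evidence only

# Toy example: two channels with 2x2 activation maps, two classes.
activations = np.array([
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
])
fc_weights = np.array([
    [1.0, -1.0],   # class 0: channel 0 supports it, channel 1 counts against it
    [0.5, 0.5],    # class 1
])
cam = class_activation_map(activations, fc_weights, class_idx=0)
print(cam)
```

In practice the resulting map would be upsampled to the image size before being overlaid on the image.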

2. Grad-CAM

The next step in the evolution of CAM came with Grad-CAM, which generalized the CAM approach to a wider variety of CNN architectures. Instead of using the weights of the last fully connected layer, it determines the gradient flowing into the last convolutional layer and uses that as the weight. So for the convolutional layer of interest A and a specific class c, the authors compute the gradient of the score for class c with respect to the feature map activations of that convolutional layer. This gradient is then global average pooled to obtain the weights for the activation maps.
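As a rough sketch of this weighting step, assume the activations of the chosen layer and the gradients of the class score with respect to them have already been extracted (for example with framework hooks). The function name `grad_cam` and the toy arrays below are illustrative assumptions, and the final ReLU mirrors the one used for CAM.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM style heat map from precomputed activations and gradients.

    activations: (K, H, W) feature maps of the chosen convolutional layer.
    gradients:   (K, H, W) gradients of the class score w.r.t. those maps.
    """
    # Global average pooling of the gradients gives one weight per channel.
    channel_weights = gradients.mean(axis=(1, 2))              # shape (K,)
    cam = np.tensordot(channel_weights, activations, axes=1)   # shape (H, W)
    return np.maximum(cam, 0.0)

# Toy example: the gradients of channel 1 average out to zero.
activations = np.array([
    [[1.0, 2.0], [3.0, 4.0]],
    [[4.0, 3.0], [2.0, 1.0]],
])
gradients = np.array([
    [[0.5, 0.5], [0.5, 0.5]],
    [[-1.0, 1.0], [-1.0, 1.0]],
])
heat_map = grad_cam(activations, gradients)
print(heat_map)
```

Here only channel 0 contributes, because the pooled gradient of channel 1 is zero.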

The final heat map has the same shape as the feature map output of that layer, so it can be quite coarse. Grad-CAM maps become progressively worse as we move to earlier layers because of the smaller receptive fields of those layers. Also, gradient-based methods suffer from vanishing gradients due to the saturation of sigmoid layers or the zero-gradient regions of the ReLU function.

3. Score-CAM

Score-CAM addresses some of these shortcomings of Grad-CAM by using the Channel-wise Increase of Confidence (CIC) as the weight for the activation maps. Since it does not use gradients, all gradient-related shortcomings are eliminated. The Channel-wise Increase of Confidence is computed by following the steps below:

- Upsampling the channel activation maps to the input size and then normalizing them

- Then, computing the pixel-wise product of the normalized maps and the input image

- Followed by taking the difference between the model output for the above input tensors and the output for some base image, which gives the increase in confidence

- Finally, applying softmax to normalize the activation map weights to [0, 1]
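The steps above can be sketched as follows. This is a simplified illustration under several assumptions: the image is a single-channel square array, upsampling is nearest-neighbour, and `toy_model` is a hypothetical stand-in for a real classifier's class score. All function names are made up for the example.

```python
import numpy as np

def upsample_nearest(activation, factor):
    # Nearest-neighbour upsampling by an integer factor (a simplifying assumption).
    return np.repeat(np.repeat(activation, factor, axis=0), factor, axis=1)

def min_max_normalize(a):
    span = a.max() - a.min()
    return (a - a.min()) / span if span > 0 else np.zeros_like(a)

def score_cam_weights(model, image, activations, baseline):
    """Channel-wise Increase of Confidence, softmax-normalized over channels."""
    factor = image.shape[0] // activations.shape[1]
    base_score = model(baseline)
    increases = []
    for activation in activations:
        mask = min_max_normalize(upsample_nearest(activation, factor))
        increases.append(model(image * mask) - base_score)
    increases = np.array(increases)
    exp = np.exp(increases - increases.max())   # numerically stable softmax
    return exp / exp.sum()

def toy_model(image):
    # Hypothetical class score: reacts only to the top-left quadrant.
    return float(image[:2, :2].mean())

image = np.ones((4, 4))
baseline = np.zeros((4, 4))
activations = np.array([
    [[1.0, 0.0], [0.0, 0.0]],   # channel 0 fires on the top-left region
    [[0.0, 0.0], [0.0, 1.0]],   # channel 1 fires on the bottom-right region
])
weights = score_cam_weights(toy_model, image, activations, baseline)
heat_map = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
print(weights)
```

The channel whose mask preserves the region the toy model relies on receives the larger weight, which is the behaviour the increase-in-confidence weighting is meant to capture.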

The Score-CAM approach can be applied to any layer of the model and provides one of the most reasonable heat maps among the CAM approaches.

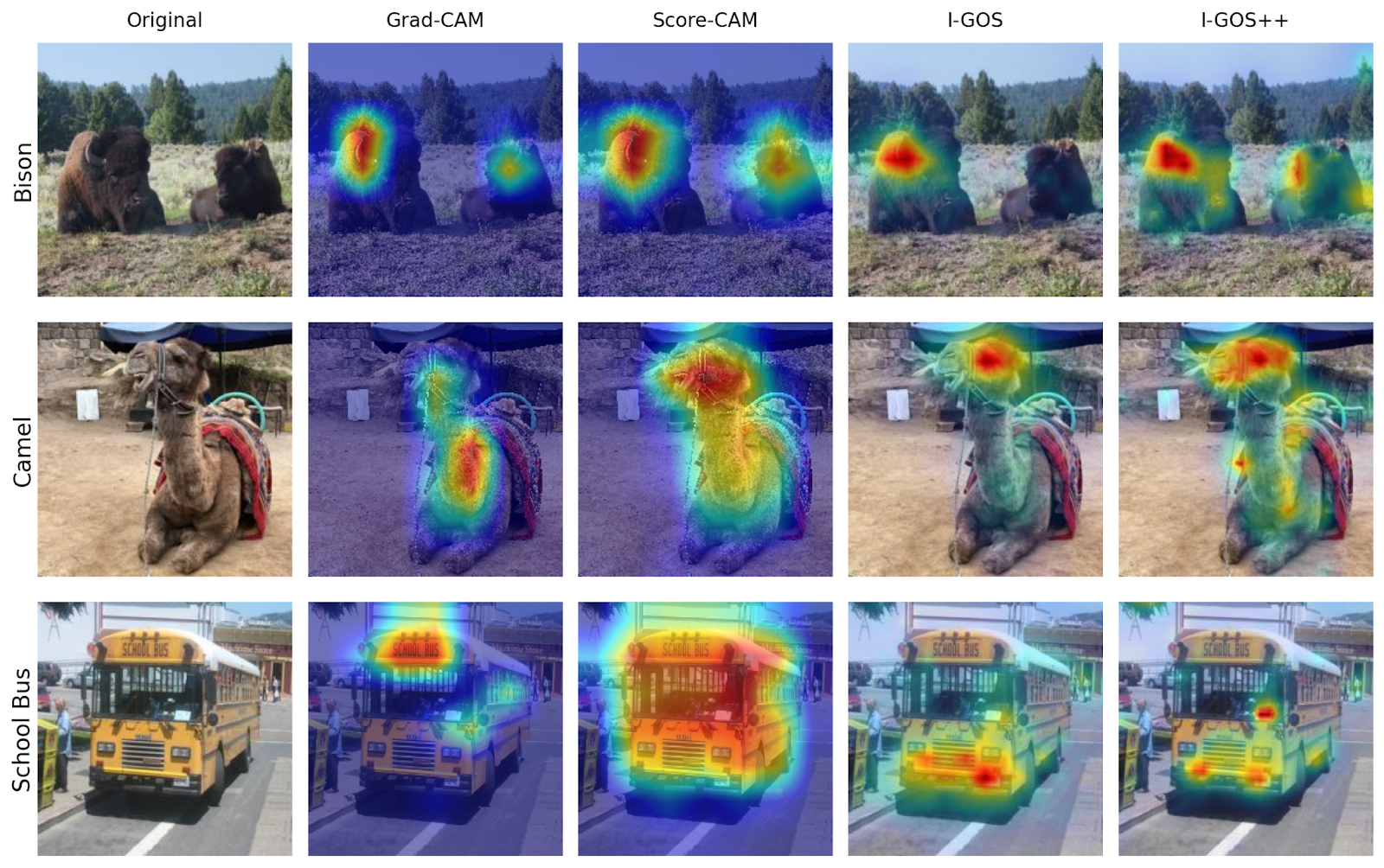

To illustrate the heat maps generated by the Grad-CAM and Score-CAM approaches, I selected three images: a bison, a camel, and a school bus. For the model, I used the Convnext-Tiny implementation in TorchVision. I extended the PyTorch Grad-CAM repo to generate heat maps for the layer convnext_tiny.features[7][2].block[5]. In the accompanying visualization, one can observe that Grad-CAM and Score-CAM highlight similar regions for the bison image. However, Score-CAM’s heat map seems more intuitive for the camel and school bus examples.

Perturbation-Based Approaches

Perturbation-based approaches work by masking part of the input image and then observing how this affects the model’s performance. These techniques directly solve an optimization problem to determine the mask that best explains the model’s behavior. I-GOS and I-GOS++ are the most popular techniques in this category.

1. Integrated-Gradients Optimized Saliency (I-GOS)

The I-GOS paper generates a heat map by finding the smallest and smoothest mask that optimizes for the deletion metric. This involves identifying a mask such that if the masked portions of the image are removed, the model’s prediction confidence is significantly reduced. Thus, the masked region is critical for the model’s decision-making.

The mask in I-GOS is obtained by solving an optimization problem. One way to solve this problem is to use conventional gradients in the gradient descent algorithm. However, such a method can be very time-consuming and is prone to getting stuck in local optima. Thus, instead of using conventional gradients, the authors recommend using integrated gradients to provide a better descent direction. Integrated gradients are calculated by going from a baseline image (one that gives very low confidence in the model outputs) to the original image and accumulating gradients on the images along this line.
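The accumulation of gradients along the line from the baseline to the input can be sketched as below. This shows only the generic integrated-gradients computation on a toy function with a known gradient; it is not the I-GOS mask optimizer itself, and the names `integrated_gradients`, `toy_score`, and `toy_gradient` are assumptions for illustration.

```python
import numpy as np

def integrated_gradients(grad_fn, x, baseline, steps=50):
    """Average the gradients at points on the straight line from baseline to x,
    then scale by the difference (x - baseline)."""
    # Midpoints of `steps` equal sub-intervals of [0, 1].
    alphas = (np.arange(steps) + 0.5) / steps
    total = np.zeros_like(x)
    for alpha in alphas:
        total += grad_fn(baseline + alpha * (x - baseline))
    return (x - baseline) * total / steps

def toy_score(x):
    # Hypothetical model output: sum of squared inputs.
    return float(np.sum(x ** 2))

def toy_gradient(x):
    # Analytic gradient of toy_score.
    return 2.0 * x

x = np.array([1.0, 2.0, 3.0])
baseline = np.zeros_like(x)
attributions = integrated_gradients(toy_gradient, x, baseline)
print(attributions, attributions.sum(), toy_score(x) - toy_score(baseline))
```

For this toy function the attributions add up to the difference between the score at the input and the score at the baseline, which is a useful sanity check for any integrated-gradients implementation.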

2. I-GOS++

I-GOS++ extends I-GOS by also optimizing for the insertion metric. This metric implies that keeping only the highlighted portions of the heat map should be sufficient for the model to retain confidence in its decision. The main argument for incorporating insertion masks is to prevent adversarial masks, which do not explain the model’s behavior but score very well on the deletion metric. In fact, I-GOS++ tries to optimize three masks: a deletion mask, an insertion mask, and a combined mask. The combined mask is the element-wise product of the insertion and deletion masks and is the output of the I-GOS++ technique. The technique also adds regularization to make masks smooth over image regions with similar colors, thus enabling the generation of better high-resolution heat maps.
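To make the two metrics concrete, here is a small sketch that evaluates a fixed set of masks rather than optimizing them. It assumes a convention in which mask values of 1 mark highlighted pixels, which may differ from the paper's own convention; `toy_model` and both scoring functions are hypothetical names introduced for the example.

```python
import numpy as np

def deletion_score(model, image, baseline, mask):
    # Replace the highlighted pixels with the baseline; a good mask gives a LOW score.
    return model(image * (1.0 - mask) + baseline * mask)

def insertion_score(model, image, baseline, mask):
    # Keep only the highlighted pixels; a good mask gives a HIGH score.
    return model(image * mask + baseline * (1.0 - mask))

def toy_model(image):
    # Hypothetical class score: reacts only to the top-left quadrant.
    return float(image[:2, :2].mean())

image = np.ones((4, 4))
baseline = np.zeros((4, 4))

deletion_mask = np.zeros((4, 4))
deletion_mask[:2, :] = 1.0      # highlights the top half
insertion_mask = np.zeros((4, 4))
insertion_mask[:, :2] = 1.0     # highlights the left half

# Element-wise product keeps only the pixels both masks agree on.
combined_mask = deletion_mask * insertion_mask

print(deletion_score(toy_model, image, baseline, combined_mask))
print(insertion_score(toy_model, image, baseline, combined_mask))
```

In this toy setup the combined mask covers exactly the top-left quadrant, so deleting it removes all the evidence while keeping only that region retains the full score.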

Next, we compare the heat maps of I-GOS and I-GOS++ with those of the Grad-CAM and Score-CAM approaches. For this, I used the I-GOS++ repo to generate heat maps for the Convnext-Tiny model on the bison, camel, and school bus examples used above. One can notice in the visualization below that the perturbation techniques provide less diffuse heat maps than the CAM approaches. In particular, I-GOS++ provides very precise heat maps.

Structured Attention Graphs for Image Classification

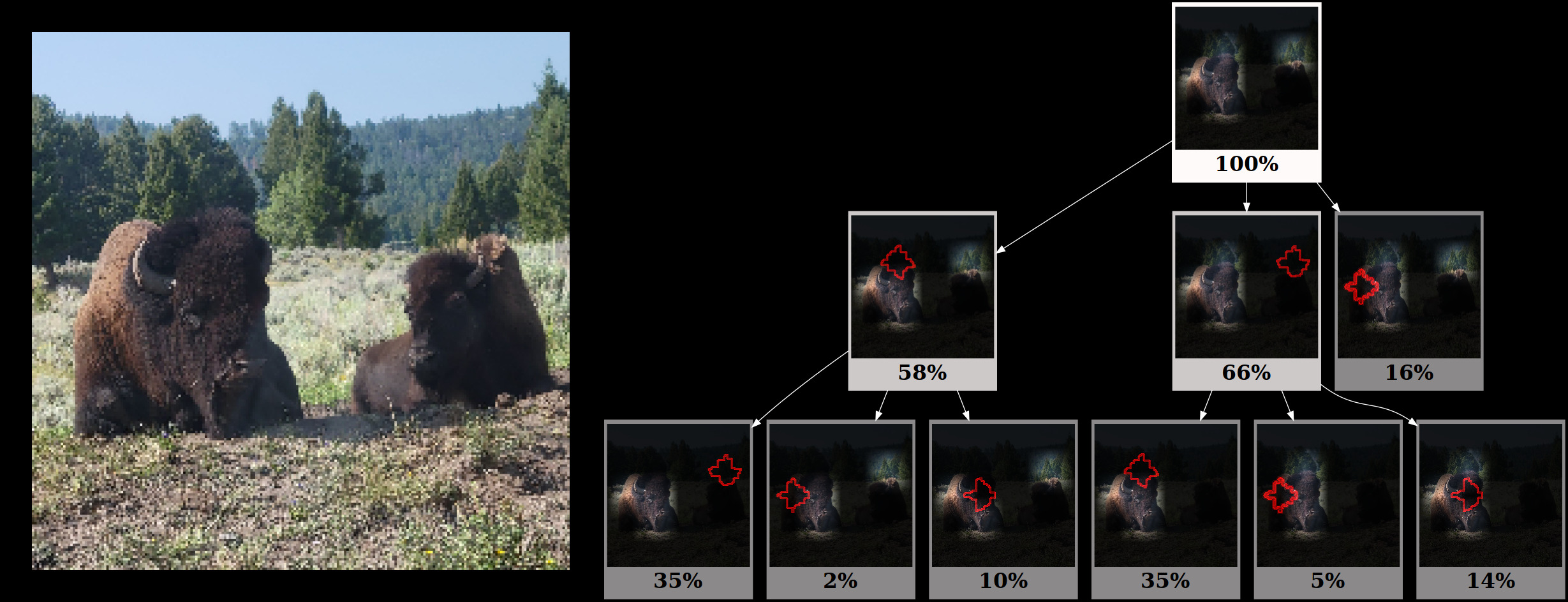

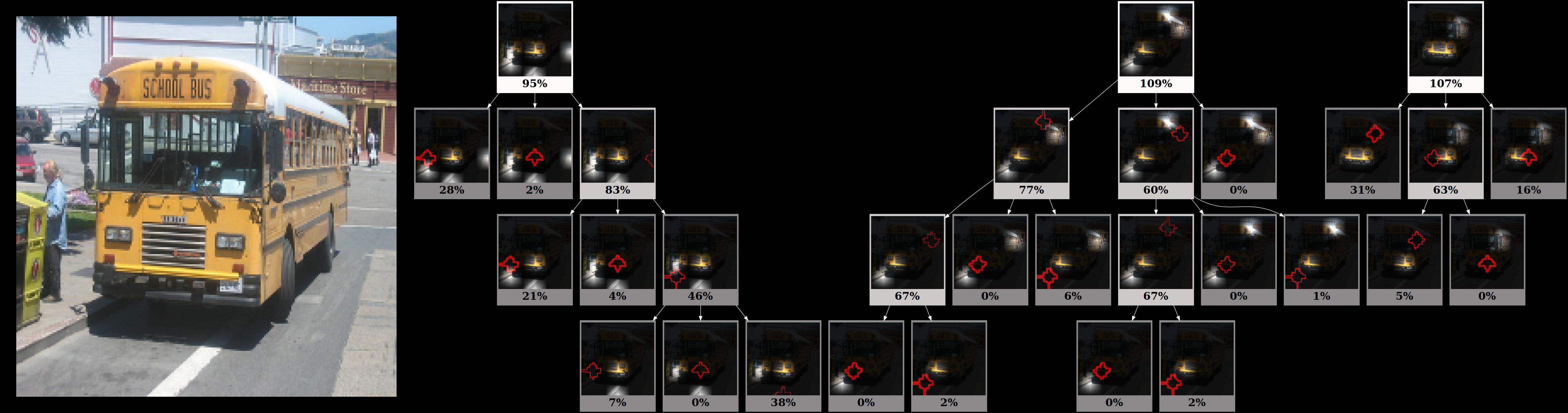

The Structured Attention Graphs (SAG) paper presents the counter view that a single explanation (heat map) is not sufficient to explain a model’s decision-making. Rather, multiple possible explanations exist that can explain the model’s decision equally well. Thus, the authors suggest using beam search to find all such possible explanations and then using SAGs to concisely present this information for easier analysis. SAGs are basically “directed acyclic graphs” where each node represents a set of image patches and each edge represents a subset relationship. Each subset is obtained by removing one patch from the parent node’s image. Each root node represents one of the possible explanations for the model’s decision.

To build the SAG, we need to solve a subset selection problem to identify a diverse set of candidates that can serve as the root nodes. The child nodes are obtained by recursively removing one patch from the parent node. Then, the score for each node is obtained by passing the image represented by that node through the model. Nodes below a certain threshold (40%) are not expanded further. This leads to a meaningful and concise representation of the model’s decision-making process. However, the SAG approach is limited to coarser representations because the combinatorial search is very computationally expensive.
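The expansion procedure can be sketched with plain Python sets. This sketch assumes the root set of patches is already given (the beam search and diverse subset selection are omitted), treats the 40% threshold as a cut-off on the score itself, and uses a hypothetical `toy_score` in place of a real model.

```python
def build_sag(root, score_fn, threshold=0.4):
    """Expand a root set of patches into a graph.

    Returns a dict mapping each node (a frozenset of patch ids) to a
    (score, children) pair. Nodes scoring below the threshold are kept
    in the graph but not expanded further.
    """
    graph = {}
    stack = [frozenset(root)]
    while stack:
        node = stack.pop()
        if node in graph:            # a node can be reached from several parents
            continue
        score = score_fn(node)
        children = []
        if score >= threshold and len(node) > 1:
            # Each child removes exactly one patch from its parent.
            children = [node - {patch} for patch in sorted(node)]
            stack.extend(children)
        graph[node] = (score, children)
    return graph

def toy_score(patches):
    # Hypothetical confidence: high only when patches 0 and 1 are both visible.
    if {0, 1} <= patches:
        return 0.9
    if 0 in patches or 1 in patches:
        return 0.3
    return 0.05

sag = build_sag({0, 1, 2}, toy_score)
for node, (score, children) in sorted(sag.items(), key=lambda item: -len(item[0])):
    print(sorted(node), score, [sorted(child) for child in children])
```

Because shared children are stored only once, the result is a directed acyclic graph rather than a strict tree.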

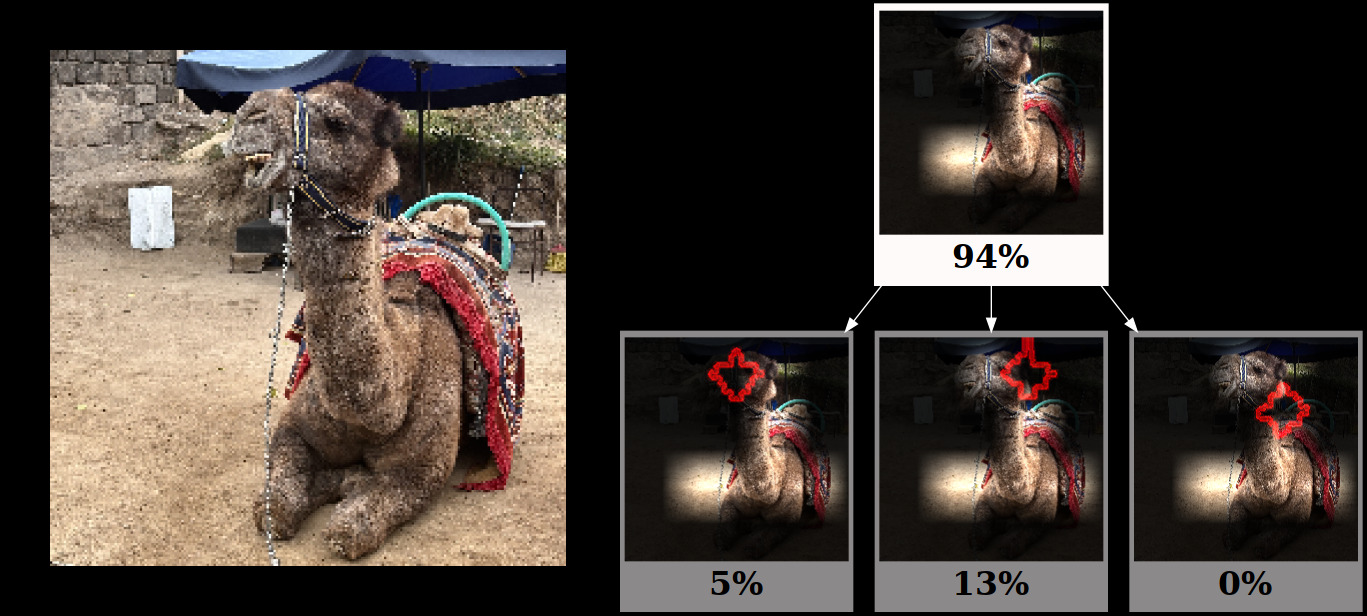

Some illustrations of Structured Attention Graphs, generated using the SAG GitHub repo, are provided here. For the bison and camel examples with the Convnext-Tiny model, we get only one explanation; for the school bus example, however, we get 3 independent explanations.

Applications of Explanation Methods

Model Debugging

The I-GOS++ paper presents an interesting case study substantiating the need for model explainability. The model in this study was trained to detect COVID-19 cases using chest X-ray images. However, using the I-GOS++ technique, the authors discovered a bug in the model’s decision-making process. The model was paying attention not only to the lung area but also to the text written on the X-ray images. Clearly, the text should not have been considered by the model, indicating a possible case of overfitting. To alleviate this issue, the authors pre-processed the images to remove the text, and this improved performance on the original diagnosis task. Thus, a model explainability technique, I-GOS++, helped debug a critical model.

Understanding Decision-Making Mechanisms of CNNs and Transformers

Jiang et al., in their CVPR 2024 paper, deployed the SAG, I-GOS++, and Score-CAM techniques to understand the decision-making mechanisms of the most popular types of networks: Convolutional Neural Networks (CNNs) and Transformers. This paper applied explanation methods on a dataset basis instead of a single image and gathered statistics to explain the decision-making of these models. Using this approach, they found that Transformers have the ability to use multiple parts of an image to reach their decisions, in contrast to CNNs, which use several disjoint smaller sets of image patches to reach their decision.

Key Takeaways

- Several heat map techniques like Grad-CAM, Score-CAM, I-GOS, and I-GOS++ can be used to generate visualizations that show which parts of the image a model focuses on when making its decisions.

- Structured Attention Graphs provide an alternative visualization that offers multiple possible explanations for the model’s confidence in its predicted class.

- Explanation techniques can be used to debug models and can also help us better understand model architectures.