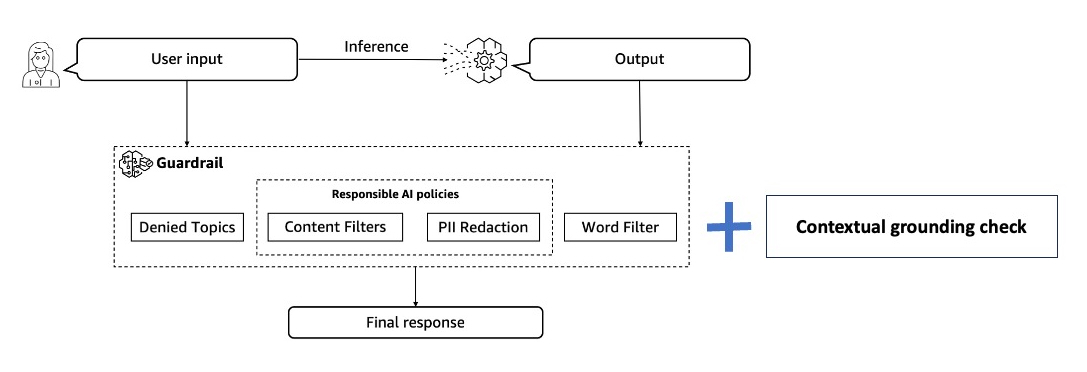

Guardrails for Amazon Bedrock lets you implement safeguards for your generative AI applications based on your use cases and responsible AI policies. You can create multiple guardrails tailored to different use cases and apply them across multiple foundation models (FMs), providing a consistent user experience and standardizing safety and privacy controls across generative AI applications.

Until now, Guardrails supported four policies: denied topics, content filters, sensitive information filters, and word filters. The Contextual grounding check policy (the latest one added at the time of writing) can detect and filter hallucinations in model responses that are not grounded in enterprise data or are irrelevant to the user's query.

Contextual Grounding To Prevent Hallucinations

The generative AI applications that we build depend on LLMs to provide accurate responses. These responses might rely on the LLM's inherent capabilities or on techniques such as RAG (Retrieval Augmented Generation). However, it is a known fact that LLMs are prone to hallucination and can end up responding with inaccurate information, which hurts application reliability.

The Contextual grounding check policy evaluates hallucinations using two parameters:

- Grounding: This checks whether the model response is factually accurate based on the source and is grounded in the source. Any new information introduced in the response will be considered un-grounded.

- Relevance: This checks whether the model response is relevant to the user query.

Score Based Evaluation

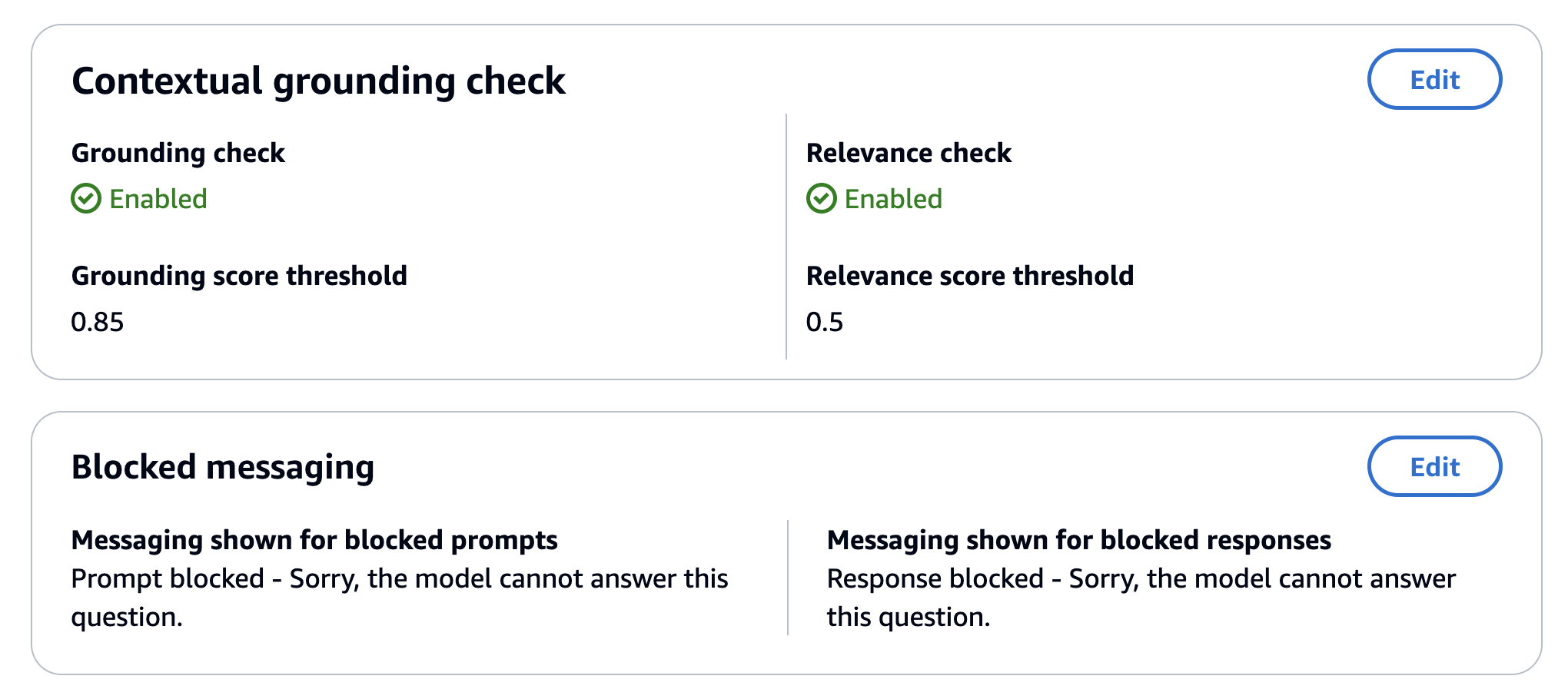

The result of the contextual grounding check is a set of confidence scores for grounding and relevance, calculated for each model response from the source and user query provided. You can configure thresholds to filter (block) model responses based on these scores. The thresholds determine the minimum confidence score a model response needs to be considered grounded and relevant.

For example, if your grounding threshold and relevance threshold are each set at 0.6, all model responses with a grounding or relevance score of less than 0.6 will be detected as hallucinations and blocked.

You may need to adjust the thresholds based on the accuracy tolerance of your specific use case. For example, a customer-facing application in the finance domain may need a high threshold because of its lower tolerance for inaccurate content. Keep in mind that higher grounding and relevance thresholds will result in more responses being blocked.
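To make the threshold rule concrete, here is a small Python sketch of the decision as described above. It is an illustration only, not how Bedrock computes or applies the scores internally, and the `GroundingScores` class and `is_blocked` function are hypothetical names. Following the wording above, a response is blocked if either score is strictly below its threshold, so a score exactly equal to the threshold passes.

```python
from dataclasses import dataclass


@dataclass
class GroundingScores:
    """Hypothetical container for the two confidence scores (0.0 to 1.0)."""
    grounding: float  # how well the response is supported by the source
    relevance: float  # how well the response addresses the user query


def is_blocked(scores: GroundingScores,
               grounding_threshold: float,
               relevance_threshold: float) -> bool:
    """Return True if either score falls below its configured threshold.

    Illustrative only: mirrors the rule described in the text, where a
    response scoring lower than the threshold on grounding OR relevance
    is treated as a hallucination and blocked.
    """
    return (scores.grounding < grounding_threshold
            or scores.relevance < relevance_threshold)


if __name__ == "__main__":
    response = GroundingScores(grounding=0.7, relevance=0.9)
    # With both thresholds at 0.6, this response passes.
    print(is_blocked(response, 0.6, 0.6))   # False
    # A stricter grounding threshold (e.g. for a finance app) blocks it.
    print(is_blocked(response, 0.85, 0.6))  # True
```

Raising the grounding threshold from 0.6 to 0.85 turns the same response into a block, which is the trade-off described above for domains with low tolerance for inaccuracy.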

Getting Started With Contextual Grounding

To understand how contextual grounding checks work, I'd recommend using the Amazon Bedrock console, since it makes it easy to test your Guardrail policies with different combinations of source data and prompts.

Start by creating a Guardrails configuration. For this example, I've set the grounding check threshold to 0.85 and the relevance score threshold to 0.5, and configured the messages for blocked prompts and responses:

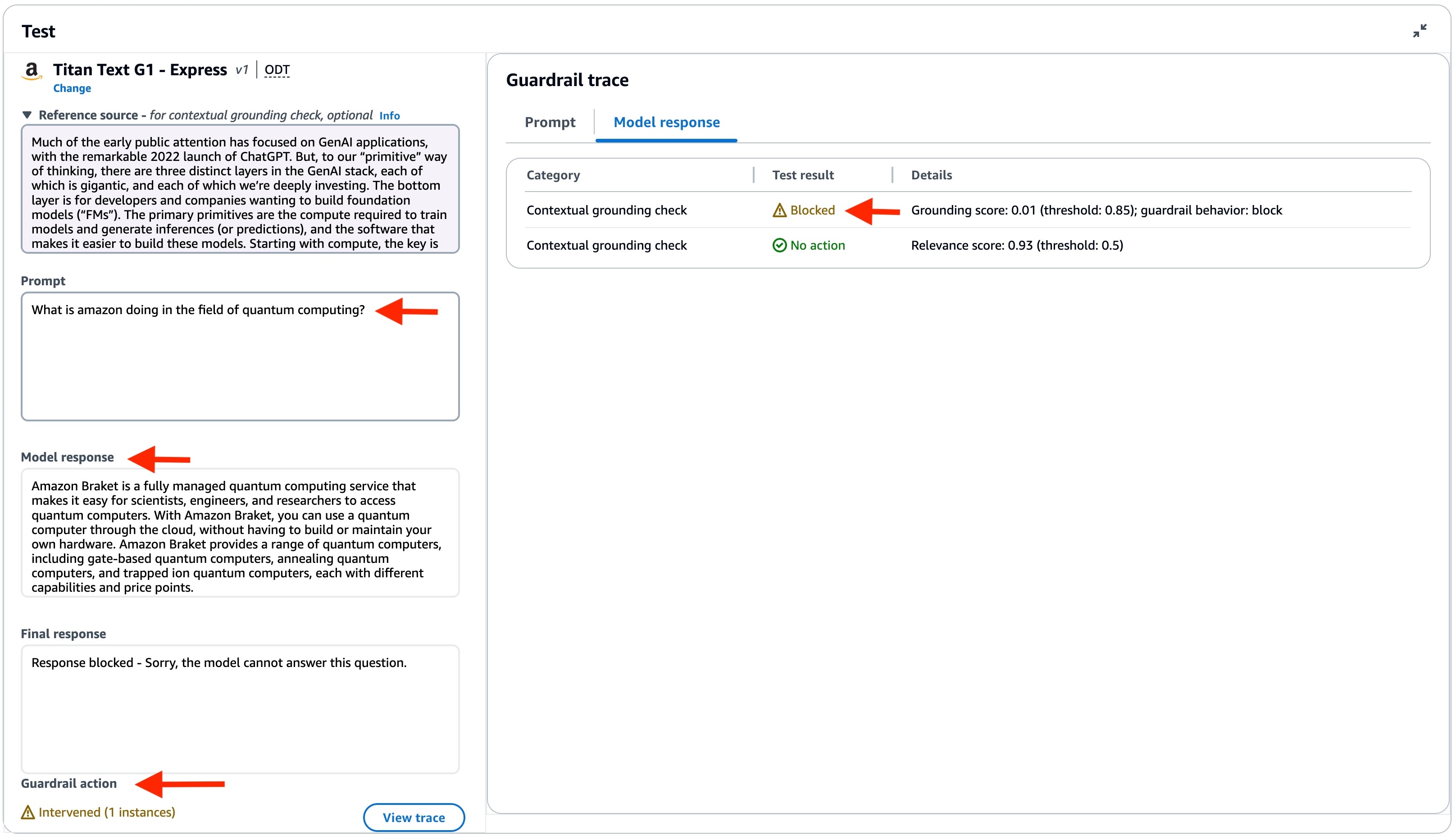
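If you prefer to script this step, a minimal boto3 sketch might look like the following. It assumes the `bedrock` control-plane client's `create_guardrail` and `create_guardrail_version` operations with a `contextualGroundingPolicyConfig` parameter, so check the field names against the current API reference. The helper names and the blocked-message strings are hypothetical placeholders. The client is passed in as an argument so you can try the request-building logic without AWS credentials.

```python
def build_guardrail_request(name: str,
                            grounding_threshold: float = 0.85,
                            relevance_threshold: float = 0.5) -> dict:
    """Build keyword arguments for a CreateGuardrail call (hypothetical helper)."""
    return {
        "name": name,
        # Placeholder messages returned instead of a blocked prompt or response.
        "blockedInputMessaging": "Prompt blocked - Sorry, the model cannot answer this question.",
        "blockedOutputsMessaging": "Response blocked - Sorry, the model cannot answer this question.",
        # Contextual grounding check: one filter per score, each with its threshold.
        "contextualGroundingPolicyConfig": {
            "filtersConfig": [
                {"type": "GROUNDING", "threshold": grounding_threshold},
                {"type": "RELEVANCE", "threshold": relevance_threshold},
            ]
        },
    }


def create_grounding_guardrail(bedrock_client, name: str) -> tuple[str, str]:
    """Create the guardrail, publish a numbered version, return (id, version).

    `bedrock_client` is expected to be boto3.client("bedrock"), the
    control-plane client (not bedrock-runtime or bedrock-agent-runtime).
    """
    created = bedrock_client.create_guardrail(**build_guardrail_request(name))
    guardrail_id = created["guardrailId"]
    # Publishing a version freezes the current (DRAFT) configuration.
    published = bedrock_client.create_guardrail_version(guardrailIdentifier=guardrail_id)
    return guardrail_id, published["version"]
```

For example, `create_grounding_guardrail(boto3.client("bedrock"), "grounding-demo")` would return the guardrail ID and version that the programmatic example later in this post expects.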

For this test, I took a snippet of text from the 2023 Amazon shareholder letter PDF and used it as the Reference source. For the Prompt, I used: What is Amazon doing in the field of quantum computing?

The nice part about using the AWS console is that you can see not only the final response (pre-configured in the Guardrail), but also the actual model response that was blocked.

In this case, the model response was relevant, since it came back with information about Amazon Braket. But the response was un-grounded, since it wasn't based on the source information, which had no data about quantum computing or Amazon Braket. Hence the grounding score was 0.01, much lower than the configured threshold of 0.85, which resulted in the model response getting blocked.

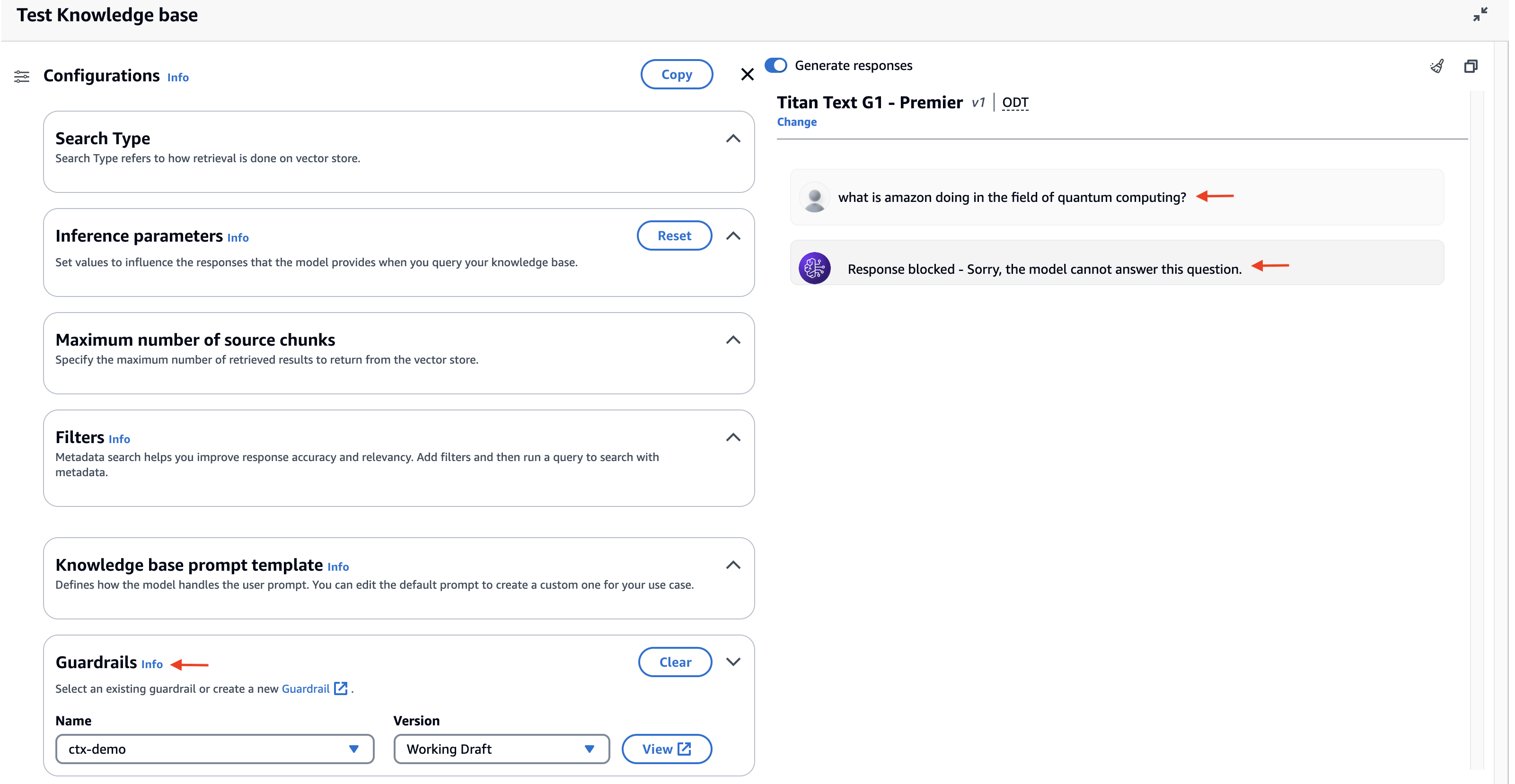
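The console test above can likely be reproduced in code with the `ApplyGuardrail` API of the `bedrock-runtime` client, which checks a piece of text against a guardrail without invoking a model. This API is not part of the original walkthrough. The `qualifiers` values and response fields below reflect my reading of the API reference and are worth double-checking. `check_grounding` and `extract_grounding_scores` are hypothetical helper names.

```python
def check_grounding(runtime_client, guardrail_id: str, guardrail_version: str,
                    source: str, query: str, model_response: str) -> dict:
    """Run a contextual grounding check on an existing model response.

    `runtime_client` is expected to be boto3.client("bedrock-runtime").
    """
    result = runtime_client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source="OUTPUT",  # we are checking a model output, not a user prompt
        content=[
            # The reference source the response should be grounded in
            {"text": {"text": source, "qualifiers": ["grounding_source"]}},
            # The user query, used for the relevance score
            {"text": {"text": query, "qualifiers": ["query"]}},
            # The model response being evaluated
            {"text": {"text": model_response, "qualifiers": ["guard_content"]}},
        ],
    )
    return extract_grounding_scores(result)


def extract_grounding_scores(result: dict) -> dict:
    """Collect GROUNDING / RELEVANCE scores from an ApplyGuardrail response."""
    scores = {"action": result.get("action")}
    for assessment in result.get("assessments", []):
        policy = assessment.get("contextualGroundingPolicy", {})
        for f in policy.get("filters", []):
            scores[f["type"]] = {
                "score": f["score"],
                "threshold": f["threshold"],
                "action": f["action"],
            }
    return scores
```

Returning a flat dictionary keyed by filter type makes it easy to log the grounding and relevance scores side by side while you tune the thresholds.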

Use Contextual Grounding Check for RAG Applications With Knowledge Bases

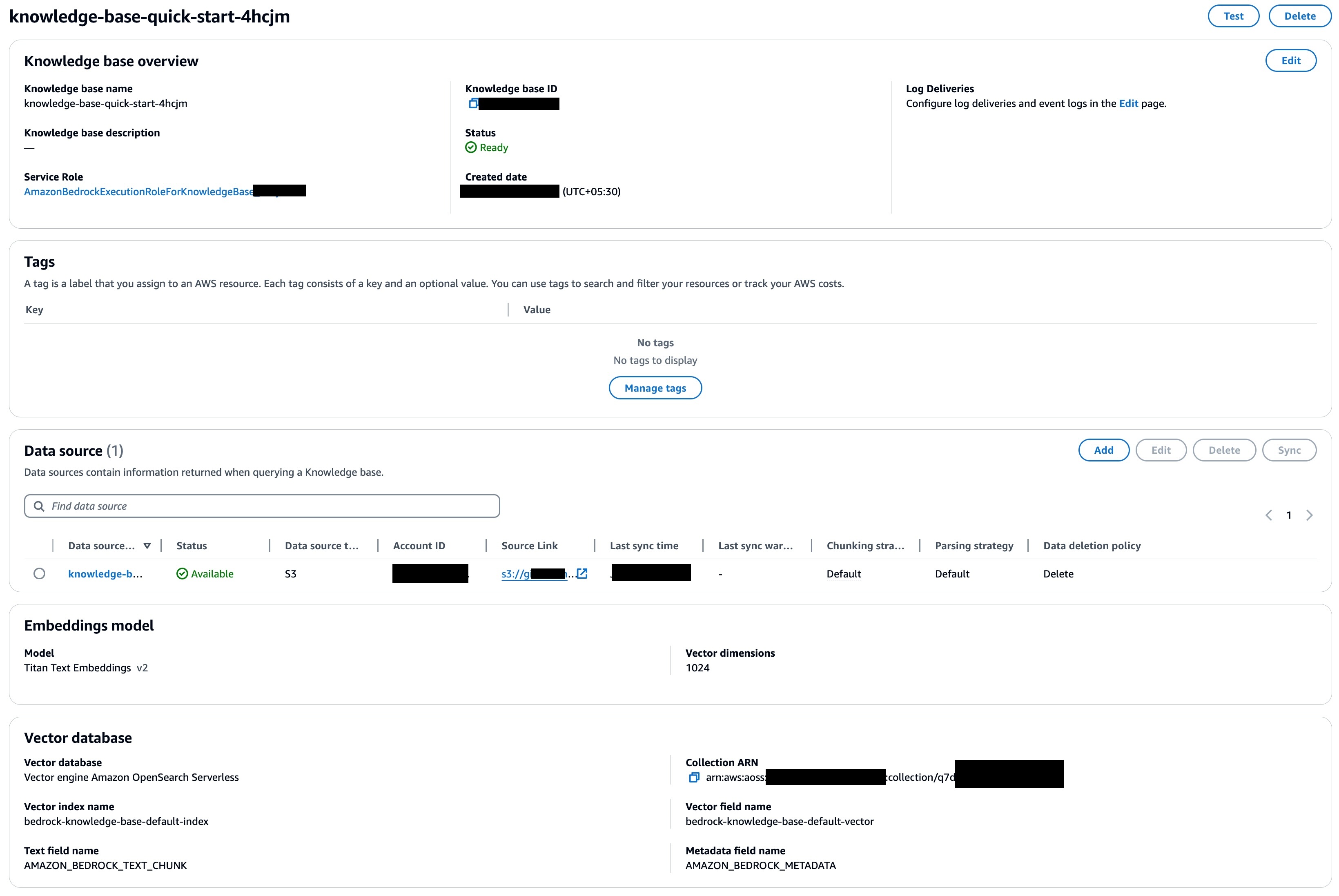

Remember, the Contextual grounding check is just another policy, and it can be used anywhere Guardrails can be used. One of the key use cases is combining it with RAG applications built with Knowledge Bases for Amazon Bedrock.

To do this, create a Knowledge Base. I created one using the 2023 Amazon shareholder letter PDF as the source data (loaded from Amazon S3) and the default vector database (an OpenSearch Serverless collection).

After the Knowledge Base has been created, sync the data source, and you should be ready to go!
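Syncing can also be scripted. The sketch below assumes the `bedrock-agent` client's `start_ingestion_job` and `get_ingestion_job` operations, which is how I understand a data source sync is triggered through the API. `sync_data_source` is a hypothetical helper, and the data source ID is an assumption you would look up in the console on the Knowledge Base's data source page.

```python
import time


def sync_data_source(agent_client, knowledge_base_id: str, data_source_id: str,
                     poll_seconds: float = 10.0) -> str:
    """Start an ingestion (sync) job and wait until it leaves its in-flight states.

    `agent_client` is expected to be boto3.client("bedrock-agent").
    Returns the final job status, e.g. "COMPLETE" or "FAILED".
    """
    job = agent_client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]

    # Poll until the job is no longer starting, running, or stopping.
    while job["status"] in ("STARTING", "IN_PROGRESS", "STOPPING"):
        time.sleep(poll_seconds)
        job = agent_client.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
    return job["status"]
```

Polling with a fixed interval keeps the sketch simple. For large document sets, a longer `poll_seconds` avoids unnecessary API calls.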

Let's start with a question that I know can be answered accurately: What is Amazon doing in the field of generative AI?

This went well, as expected: we got a relevant and grounded response.

Let's try another one: What is Amazon doing in the field of quantum computing?

As you can see, the model response got blocked, and the pre-configured response (in Guardrails) was returned instead. This is because the source data doesn't actually contain information about quantum computing (or Amazon Braket), so Guardrails prevented a hallucinated response.

Combine Contextual Grounding Checks With the RetrieveAndGenerate API

Let's go beyond the AWS console and see how to apply the same approach programmatically.

Here is an example using the RetrieveAndGenerate API, which queries a knowledge base and generates responses based on the retrieved results. I've used the AWS SDK for Python (boto3), but the same approach works with any of the SDKs.

Before trying out the example, make sure you have configured and set up Amazon Bedrock, including requesting access to the Foundation Model(s).

```python
import boto3

# Replace these placeholders with your own values
guardrailId = "ENTER_GUARDRAIL_ID"
guardrailVersion = "ENTER_GUARDRAIL_VERSION"
knowledgeBaseId = "ENTER_KB_ID"
modelArn = 'arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-instant-v1'


def main():
    # Uses your default AWS region, which should match the region in modelArn
    client = boto3.client('bedrock-agent-runtime')

    # Query the knowledge base and generate an answer, with the guardrail
    # (including its contextual grounding check) applied to the response
    response = client.retrieve_and_generate(
        input={
            'text': "what's amazon doing in the field of quantum computing?"
        },
        retrieveAndGenerateConfiguration={
            'knowledgeBaseConfiguration': {
                'generationConfiguration': {
                    'guardrailConfiguration': {
                        'guardrailId': guardrailId,
                        'guardrailVersion': guardrailVersion
                    }
                },
                'knowledgeBaseId': knowledgeBaseId,
                'modelArn': modelArn,
                'retrievalConfiguration': {
                    'vectorSearchConfiguration': {
                        'overrideSearchType': 'SEMANTIC'
                    }
                }
            },
            'type': 'KNOWLEDGE_BASE'
        },
    )

    # INTERVENED if the guardrail blocked the response, NONE otherwise
    action = response["guardrailAction"]
    print(f'Guardrail action: {action}')

    finalResponse = response["output"]["text"]
    print(f'Final response:\n{finalResponse}')


if __name__ == "__main__":
    main()
```

You can also refer to the code in this Github repo.
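In an application you will usually want to act on the result instead of just printing it. The sketch below (the `summarize_rag_response` helper is hypothetical) takes the dictionary returned by `retrieve_and_generate` and reports whether the guardrail intervened, along with the S3 URIs of the retrieved chunks when an answer was returned. It reads the `citations` and `retrievedReferences` fields defensively with `.get()`, on the assumption that not every response includes them.

```python
def summarize_rag_response(response: dict) -> dict:
    """Turn a RetrieveAndGenerate response into something an app can act on."""
    blocked = response.get("guardrailAction") == "INTERVENED"
    sources = []
    if not blocked:
        # Collect the (deduplicated) S3 URIs of the chunks behind the answer.
        for citation in response.get("citations", []):
            for ref in citation.get("retrievedReferences", []):
                uri = ref.get("location", {}).get("s3Location", {}).get("uri")
                if uri and uri not in sources:
                    sources.append(uri)
    return {
        "blocked": blocked,
        "text": response["output"]["text"],
        "sources": sources,
    }
```

Showing source URIs only for unblocked answers keeps the guardrail's pre-configured message from being presented as if it came from the knowledge base.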

Run the example (don't forget to enter the Guardrail ID, Guardrail version, and Knowledge Base ID):

```shell
pip install boto3
python grounding.py
```

You should get output like this:

```
Guardrail action: INTERVENED
Final response:
Response blocked - Sorry, the model cannot answer this question.
```

Conclusion

The contextual grounding check is a simple yet powerful way to improve response quality in applications based on RAG, summarization, or information extraction. It can help detect and filter hallucinations in model responses that are not grounded in the source information (factually inaccurate or adding new information) or are irrelevant to the user's query. The contextual grounding check is available as a policy/configuration in Guardrails for Amazon Bedrock and can be plugged in wherever you use Guardrails to enforce responsible AI in your applications.

For more details, refer to the Amazon Bedrock documentation for Contextual grounding.

Happy building!