Mistral AI offers models with varying characteristics across performance, cost, and more:

- Mistral 7B: The first dense model released by Mistral AI, ideal for experimentation, customization, and quick iteration
- Mixtral 8x7B: A sparse mixture-of-experts model
- Mistral Large: Ideal for complex tasks that require large reasoning capabilities or are highly specialized (Synthetic Text Generation, Code Generation, RAG, or Agents)
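For quick reference, the three models map to the Bedrock model IDs that appear in the examples later in this post. The sketch below collects them in one place. The `modelIDLarge` constant and the `modelIDFor` helper are hypothetical names used for illustration, not code from the repository.

```go
package main

import "fmt"

// Bedrock model IDs used in this post's examples.
const (
	modelID7BInstruct   = "mistral.mistral-7b-instruct-v0:2"
	modelID8X7BInstruct = "mistral.mixtral-8x7b-instruct-v0:1"
	modelIDLarge        = "mistral.mistral-large-2402-v1:0" // hypothetical constant name
)

// modelIDFor is a hypothetical helper that maps a short model name to its Bedrock model ID.
func modelIDFor(name string) (string, error) {
	switch name {
	case "mistral-7b":
		return modelID7BInstruct, nil
	case "mixtral-8x7b":
		return modelID8X7BInstruct, nil
	case "mistral-large":
		return modelIDLarge, nil
	default:
		return "", fmt.Errorf("unknown model: %q", name)
	}
}

func main() {
	id, err := modelIDFor("mixtral-8x7b")
	if err != nil {
		panic(err)
	}
	fmt.Println(id) // mistral.mixtral-8x7b-instruct-v0:1
}
```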

Let’s walk through how to use these Mistral AI models on Amazon Bedrock with Go, and in the process, also get a better understanding of their prompt tokens.

Getting Started With Mistral AI

Let’s start off with a simple example using Mistral 7B.

- Refer to the “Before You Begin” section in this blog post to complete the prerequisites for running the examples. This includes installing Go, configuring Amazon Bedrock access, and providing the necessary IAM permissions.

You can refer to the complete code here.

To run the instance:

git clone https://github.com/abhirockzz/mistral-bedrock-go

cd mistral-bedrock-go

go run basic/main.go

The response may (or may not) be slightly different in your case:

request payload:

{"prompt":"\u003cs\u003e[INST] Hello, what's your name? [/INST]"}

response payload:

{"outputs":[{"text":" Hello! I don't have a name. I'm just an artificial intelligence designed to help answer questions and provide information. How can I assist you today?","stop_reason":"stop"}]}

response string:

Hello! I don't have a name. I'm just an artificial intelligence designed to help answer questions and provide information. How can I assist you today?

You can refer to the complete code here.

We start by creating the JSON payload, which is modeled as a struct (MistralRequest). Also, notice the model ID mistral.mistral-7b-instruct-v0:2.

const modelID7BInstruct = "mistral.mistral-7b-instruct-v0:2"
const promptFormat = "<s>[INST] %s [/INST]"

func main() {
	msg := "Hello, what's your name?"

	payload := MistralRequest{
		Prompt: fmt.Sprintf(promptFormat, msg),
	}

	//...

Mistral has a specific prompt format, where:

- Text for the user role is inside the [INST]...[/INST] tokens.
- Text outside is the assistant role.

In the output logs above, notice how the <s> token in the request payload is JSON-escaped as \u003cs\u003e.
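The escaping comes from Go's json.Marshal, which by default escapes <, > and & so the output is safe to embed in HTML. The standalone sketch below (not part of the repository; marshalRequest and marshalRequestNoEscape are hypothetical names, and MistralRequest is trimmed to its Prompt field) compares json.Marshal with a json.Encoder that has SetEscapeHTML(false). Both outputs are equivalent JSON, so the model should receive the same <s> token either way.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
)

// MistralRequest is trimmed down to the Prompt field for this illustration.
type MistralRequest struct {
	Prompt string `json:"prompt"`
}

// marshalRequest uses json.Marshal, which escapes '<' and '>' as \u003c and \u003e.
func marshalRequest(r MistralRequest) ([]byte, error) {
	return json.Marshal(r)
}

// marshalRequestNoEscape disables HTML escaping so "<s>" is written literally.
// Encoder.Encode appends a trailing newline, which is trimmed here.
func marshalRequestNoEscape(r MistralRequest) ([]byte, error) {
	var buf bytes.Buffer
	enc := json.NewEncoder(&buf)
	enc.SetEscapeHTML(false)
	if err := enc.Encode(r); err != nil {
		return nil, err
	}
	return bytes.TrimRight(buf.Bytes(), "\n"), nil
}

func main() {
	req := MistralRequest{Prompt: fmt.Sprintf("<s>[INST] %s [/INST]", "Hello, what's your name?")}

	escaped, _ := marshalRequest(req)
	fmt.Println(string(escaped))
	// {"prompt":"\u003cs\u003e[INST] Hello, what's your name? [/INST]"}

	literal, _ := marshalRequestNoEscape(req)
	fmt.Println(string(literal))
	// {"prompt":"<s>[INST] Hello, what's your name? [/INST]"}
}
```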

Here is the MistralRequest struct that has the required attributes:

type MistralRequest struct {
	Prompt        string   `json:"prompt"`
	MaxTokens     int      `json:"max_tokens,omitempty"`
	Temperature   float64  `json:"temperature,omitempty"`
	TopP          float64  `json:"top_p,omitempty"`
	TopK          int      `json:"top_k,omitempty"`
	StopSequences []string `json:"stop,omitempty"`
}

InvokeModel is used to call the model. The JSON response is converted to a struct (MistralResponse), and the text response is extracted from it.

output, err := brc.InvokeModel(context.Background(), &bedrockruntime.InvokeModelInput{
	Body:        payloadBytes,
	ModelId:     aws.String(modelID7BInstruct),
	ContentType: aws.String("application/json"),
})
if err != nil {
	log.Fatal(err)
}

var resp MistralResponse
err = json.Unmarshal(output.Body, &resp)
if err != nil {
	log.Fatal(err)
}

fmt.Println("response string:\n", resp.Outputs[0].Text)
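The MistralResponse and Outputs types are not shown in this excerpt. Based on the response payload printed earlier ({"outputs":[{"text":...,"stop_reason":...}]}), a plausible definition looks like the following; treat the field names as an assumption inferred from that JSON. The hypothetical firstText helper also checks for an empty outputs array before indexing, which the snippet above does not do.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// Assumed response shape, inferred from the logged response payload:
// {"outputs":[{"text":"...","stop_reason":"stop"}]}
type MistralResponse struct {
	Outputs []Outputs `json:"outputs"`
}

type Outputs struct {
	Text       string `json:"text"`
	StopReason string `json:"stop_reason"`
}

// firstText is a hypothetical helper: it decodes a response body and returns
// the first output's text, returning an error instead of panicking on empty outputs.
func firstText(body []byte) (string, error) {
	var resp MistralResponse
	if err := json.Unmarshal(body, &resp); err != nil {
		return "", err
	}
	if len(resp.Outputs) == 0 {
		return "", errors.New("response contained no outputs")
	}
	return resp.Outputs[0].Text, nil
}

func main() {
	body := []byte(`{"outputs":[{"text":" Hello! I don't have a name.","stop_reason":"stop"}]}`)
	text, err := firstText(body)
	if err != nil {
		panic(err)
	}
	fmt.Printf("%q\n", text)
}
```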

Chat Example

Moving on to a simple conversational interaction: this is what Mistral refers to as a multi-turn prompt, and we will add </s>, which is the end of string token.

To run the instance:

go run chat/main.go

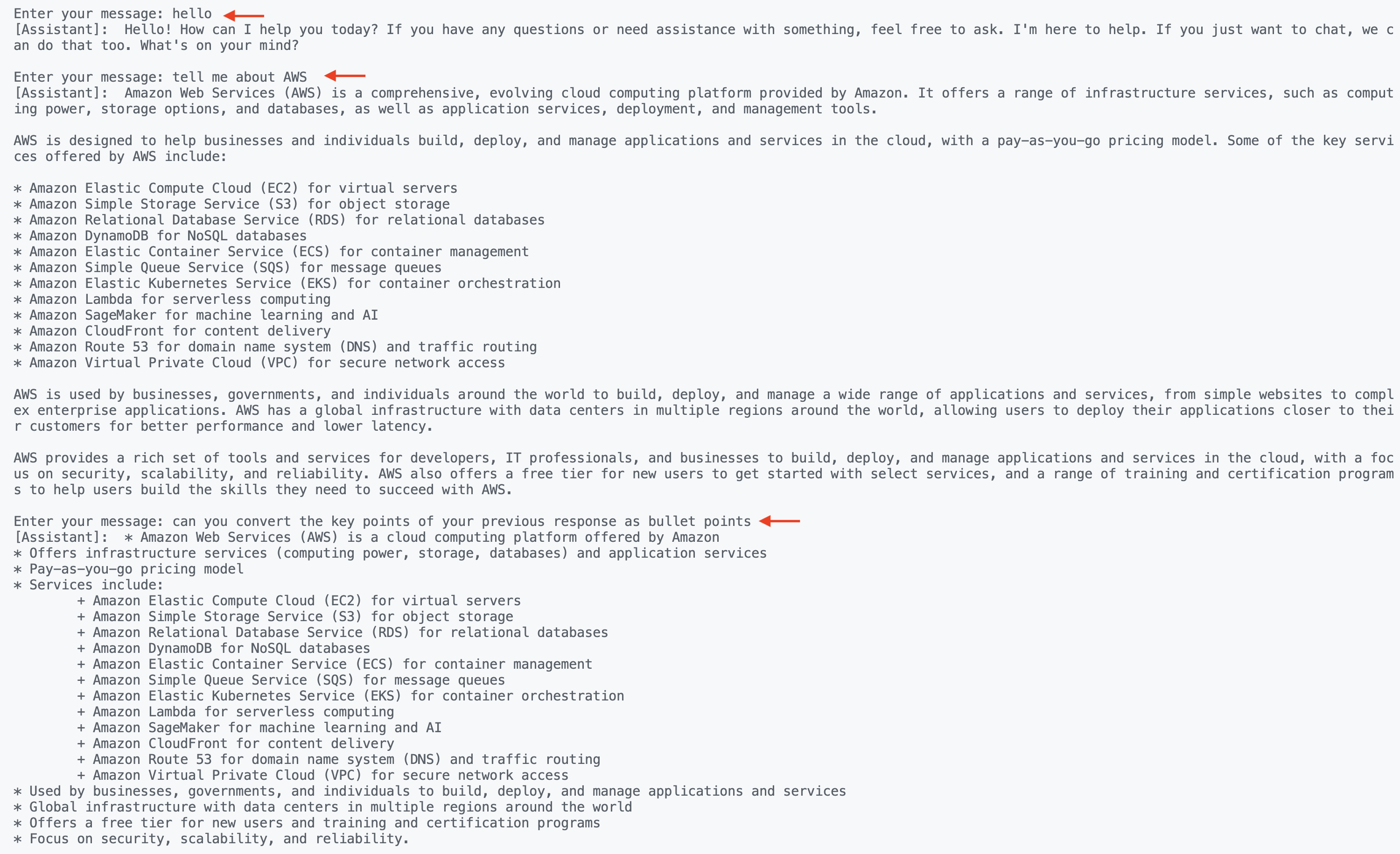

Right here is my interplay:

You can refer to the complete code here.

The code itself is overly simplified for the purposes of this example. But the important part is how the tokens are used to format the prompt. Note that we’re using Mixtral 8X7B (mistral.mixtral-8x7b-instruct-v0:1) in this example.

const userMessageFormat = "[INST] %s [/INST]"
const modelID8X7BInstruct = "mistral.mixtral-8x7b-instruct-v0:1"
const bos = "<s>"
const eos = "</s>"

var verbose *bool

func main() {
	reader := bufio.NewReader(os.Stdin)

	first := true
	var msg string

	for {
		fmt.Print("\nEnter your message: ")

		input, _ := reader.ReadString('\n')
		input = strings.TrimSpace(input)

		if first {
			msg = bos + fmt.Sprintf(userMessageFormat, input)
		} else {
			msg = msg + fmt.Sprintf(userMessageFormat, input)
		}

		payload := MistralRequest{
			Prompt: msg,
		}

		response, err := send(payload)
		if err != nil {
			log.Fatal(err)
		}

		fmt.Println("[Assistant]:", response)

		msg = msg + response + eos + " "
		first = false
	}
}

The beginning of string (bos) token is only needed once, at the start of the conversation, while eos (end of string) marks the end of a single conversation exchange (user and assistant).
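To make the token layout concrete, the sketch below rebuilds the prompt string that the chat loop accumulates over several turns, without calling Bedrock. The Turn type and buildPrompt function are hypothetical; the constants use <s> and </s> as the bos and eos tokens, and the concatenation mirrors the loop (bos once, then each finished exchange followed by eos and a space).

```go
package main

import (
	"fmt"
	"strings"
)

const (
	bos               = "<s>"
	eos               = "</s>"
	userMessageFormat = "[INST] %s [/INST]"
)

// Turn is a hypothetical type for one completed user/assistant exchange.
type Turn struct {
	User      string
	Assistant string
}

// buildPrompt produces the same string the chat loop builds up:
// bos once at the start, each completed exchange closed with eos plus a space,
// and the pending user message wrapped in [INST]...[/INST].
func buildPrompt(history []Turn, next string) string {
	var b strings.Builder
	b.WriteString(bos)
	for _, t := range history {
		fmt.Fprintf(&b, userMessageFormat, t.User)
		b.WriteString(t.Assistant)
		b.WriteString(eos + " ")
	}
	fmt.Fprintf(&b, userMessageFormat, next)
	return b.String()
}

func main() {
	history := []Turn{{User: "Hi", Assistant: "Hello!"}}
	fmt.Println(buildPrompt(history, "What can you do?"))
	// <s>[INST] Hi [/INST]Hello!</s> [INST] What can you do? [/INST]
}
```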

Chat With Streaming

If you’ve read my previous blogs, you know I always like to include a “streaming” example because:

- It provides a better experience from a client application point of view.
- It’s a common mistake to overlook the InvokeModelWithResponseStream function (the streaming counterpart of InvokeModel).
- The partial model payload response can be interesting (and tricky at times).

You can refer to the complete code here.

Let’s try this out. This example uses Mistral Large, so simply change the model ID to mistral.mistral-large-2402-v1:0. To run the example:

go run chat-streaming/main.go

Notice the use of InvokeModelWithResponseStream (instead of InvokeModel):

output, err := brc.InvokeModelWithResponseStream(context.Background(), &bedrockruntime.InvokeModelWithResponseStreamInput{
	Body:        payloadBytes,
	ModelId:     aws.String(modelID7BInstruct),
	ContentType: aws.String("application/json"),
})

//...

To process its output, we use:

//...

resp, err := processStreamingOutput(output, func(ctx context.Context, part []byte) error {
	fmt.Print(string(part))
	return nil
})

Here are a few bits from the processStreamingOutput function (you can check the code here). The important thing to understand is how the partial responses are collected together to produce the final output (MistralResponse).

func processStreamingOutput(output *bedrockruntime.InvokeModelWithResponseStreamOutput, handler StreamingOutputHandler) (MistralResponse, error) {
	var combinedResult string

	resp := MistralResponse{}
	op := Outputs{}

	for event := range output.GetStream().Events() {
		switch v := event.(type) {
		case *types.ResponseStreamMemberChunk:
			var pr MistralResponse

			err := json.NewDecoder(bytes.NewReader(v.Value.Bytes)).Decode(&pr)
			if err != nil {
				return resp, err
			}

			if err := handler(context.Background(), []byte(pr.Outputs[0].Text)); err != nil {
				return resp, err
			}

			combinedResult += pr.Outputs[0].Text
			op.StopReason = pr.Outputs[0].StopReason
			//...
		}
	}

	op.Text = combinedResult
	resp.Outputs = []Outputs{op}

	return resp, nil
}
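To see the accumulation logic in isolation, here is an offline sketch that runs the same decode-and-append steps over a slice of raw chunk payloads (the bytes each chunk event carries) instead of a live Bedrock event stream. combineChunks is a hypothetical function, and the chunk JSON in the example is made up for illustration, modeled on the response payload shape shown earlier. Unlike the excerpt above, it skips chunks with no outputs and keeps the last non-empty stop_reason, on the assumption that intermediate chunks may carry a null stop_reason.

```go
package main

import (
	"encoding/json"
	"fmt"
)

type Outputs struct {
	Text       string `json:"text"`
	StopReason string `json:"stop_reason"`
}

type MistralResponse struct {
	Outputs []Outputs `json:"outputs"`
}

// combineChunks is a hypothetical offline version of the accumulation loop:
// decode each chunk, pass its text to onPart, and append it to the final output.
func combineChunks(chunks [][]byte, onPart func(string)) (MistralResponse, error) {
	var combined string
	op := Outputs{}
	for _, c := range chunks {
		var pr MistralResponse
		if err := json.Unmarshal(c, &pr); err != nil {
			return MistralResponse{}, err
		}
		if len(pr.Outputs) == 0 {
			continue // skip chunks without output instead of panicking on [0]
		}
		onPart(pr.Outputs[0].Text)
		combined += pr.Outputs[0].Text
		// A JSON null stop_reason decodes to "", so keep the last non-empty value.
		if pr.Outputs[0].StopReason != "" {
			op.StopReason = pr.Outputs[0].StopReason
		}
	}
	op.Text = combined
	return MistralResponse{Outputs: []Outputs{op}}, nil
}

func main() {
	// Made-up chunk payloads for illustration; not captured model output.
	chunks := [][]byte{
		[]byte(`{"outputs":[{"text":" Hello","stop_reason":null}]}`),
		[]byte(`{"outputs":[{"text":" there!","stop_reason":"stop"}]}`),
	}
	resp, err := combineChunks(chunks, func(s string) { fmt.Print(s) })
	if err != nil {
		panic(err)
	}
	fmt.Println()
	fmt.Printf("%q (stop_reason: %s)\n", resp.Outputs[0].Text, resp.Outputs[0].StopReason)
}
```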

Conclusion

Remember: building AI/ML applications using Large Language Models (like Mistral, Meta Llama, Claude, etc.) does not mean that you have to use Python. Managed platforms provide access to these powerful models through flexible APIs in a variety of programming languages, including Go! With AWS SDK support, you can use the programming language of your choice to integrate with Amazon Bedrock and build generative AI solutions.

You can learn more by exploring the official Mistral documentation as well as the Amazon Bedrock user guide. Happy building!