Thanks to langchaingo, it is possible to build composable generative AI applications using Go. I'll walk you through how I used the code generation (and software development in general) capabilities of Amazon Q Developer in VS Code to enhance langchaingo.

Let's get right to it!

I started by cloning langchaingo and opened the project in VS Code:

    git clone https://github.com/tmc/langchaingo
    code langchaingo

langchaingo has an LLM component that supports Amazon Bedrock models, including Claude, the Titan family, etc. I wanted to add support for another model.

Add Titan Text Premier Support

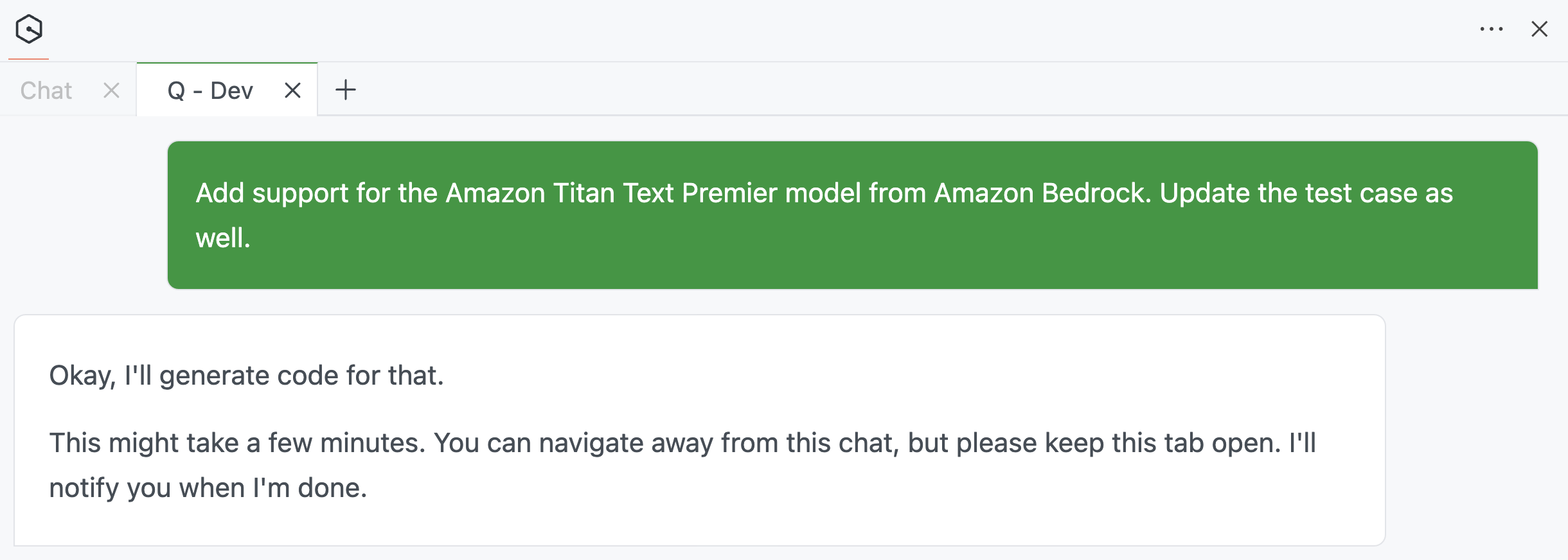

So I started with this prompt: Add support for the Amazon Titan Text Premier model from Amazon Bedrock. Update the test case as well.

Amazon Q Developer kicks off the code generation process…

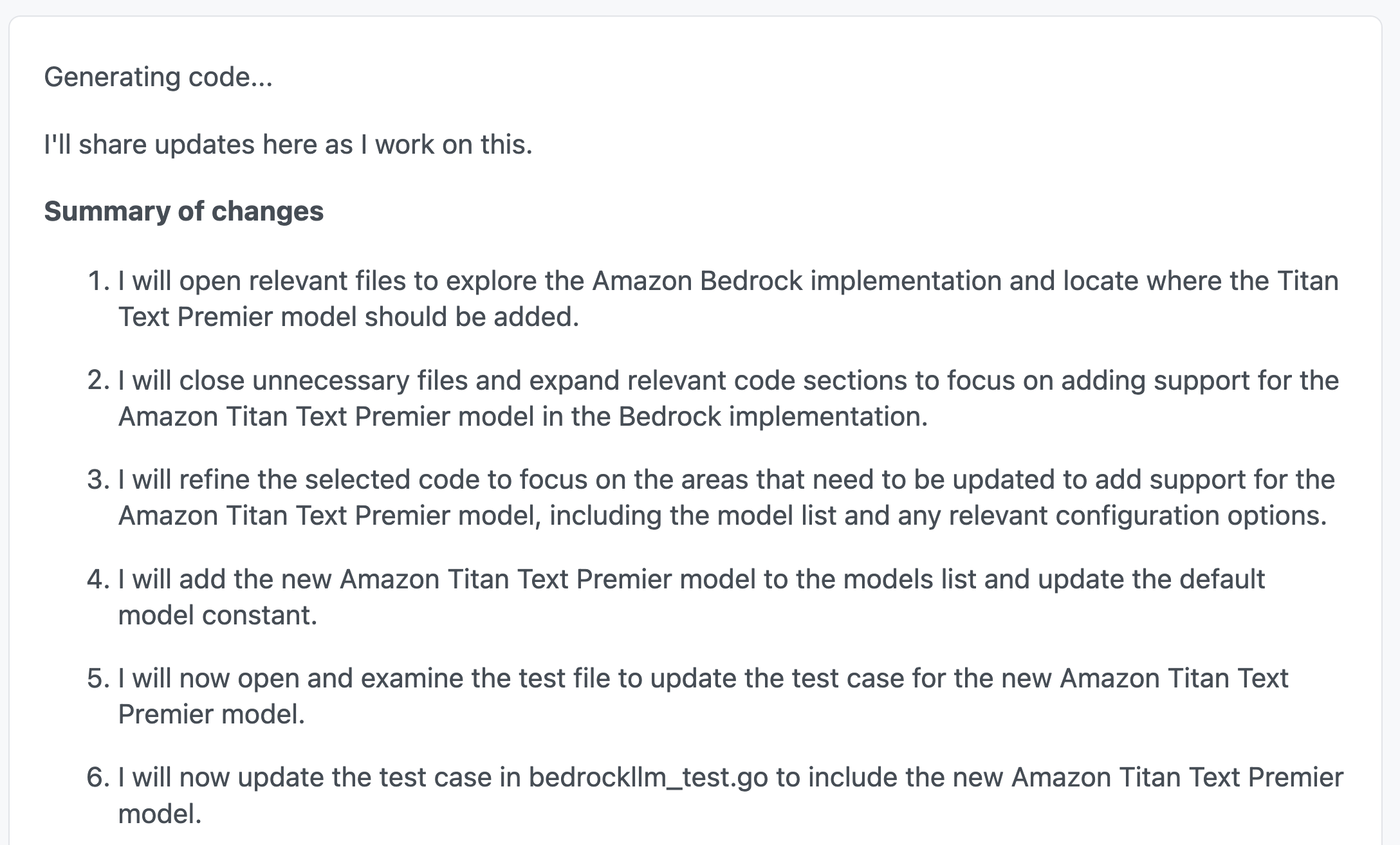

Reasoning

The interesting part was how it constantly shared its thought process (I didn't really have to prompt it to do that!). Although it isn't evident in the screenshot, Amazon Q Developer kept updating its thought process as it went about its job.

This brought back (not so fond) memories of Leetcode interviews where the interviewer had to constantly remind me to be vocal and share my thought process. Well, there you go!

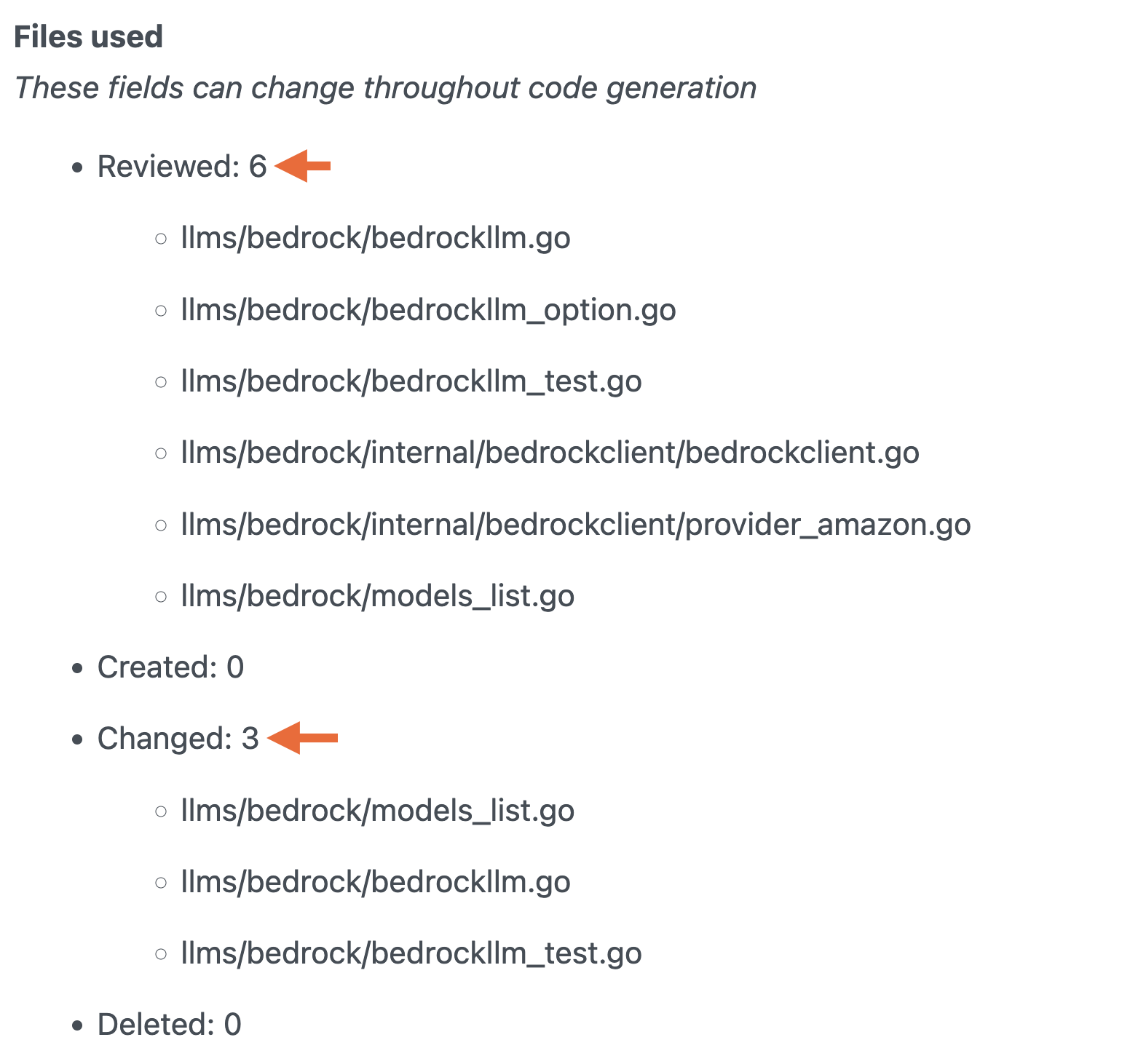

Once it's done, the changes are clearly listed:

Introspecting the Code Base

It's also super helpful to see the files that were introspected as part of the process. Remember, Amazon Q Developer uses the entire code base as a reference or context, which is super important. In this case, notice how it was smart enough to only probe files related to the problem statement.

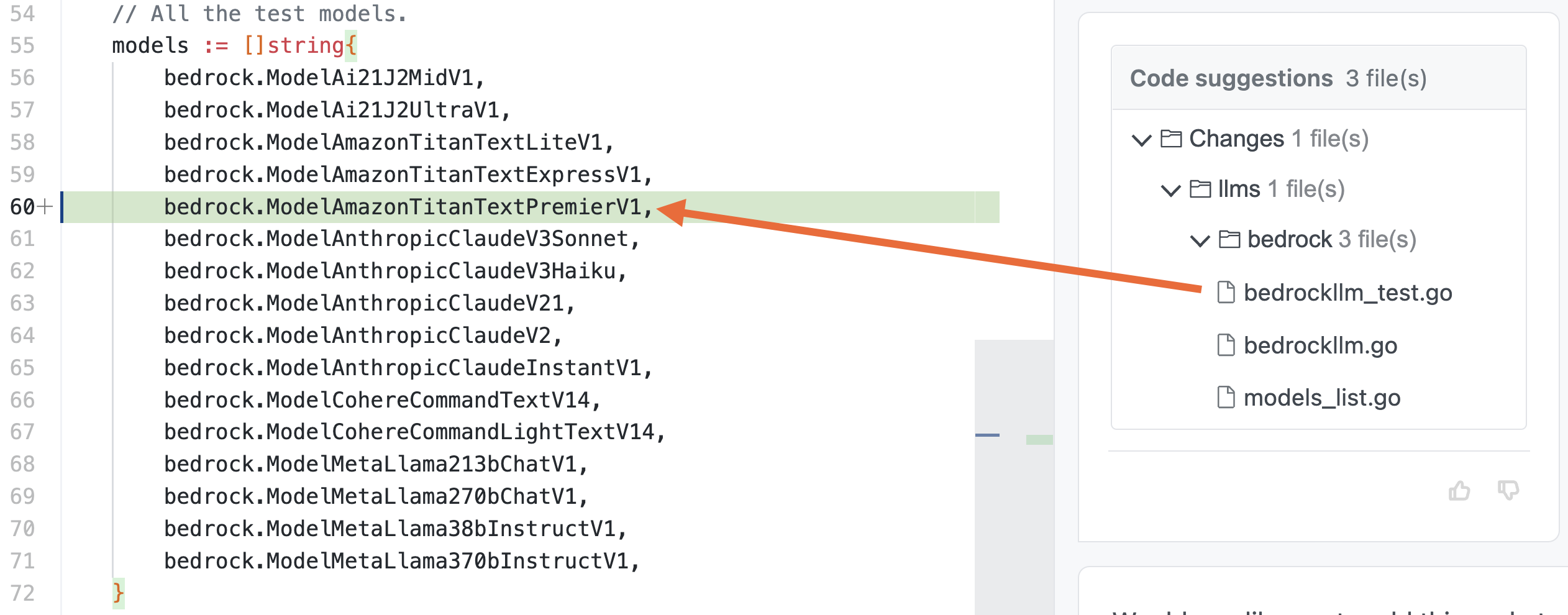

Code Suggestions

Finally, it came up with the code update suggestions, including a test case. Looking at the result, it might seem that this was an easy one. But for someone new to the codebase, this can be really helpful.
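To give a feel for the kind of change involved, here is a minimal sketch. It is not the diff Amazon Q Developer produced, and it is not langchaingo's actual code. It assumes that Bedrock model IDs take the form `provider.model-name`, and that adding a model mostly means adding a new ID constant that is routed to the right provider. The constant name matches the one used later in this post; its value is my understanding of the Titan Text Premier model ID, so verify it against the Bedrock documentation. `providerOf` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"strings"
)

// ModelAmazonTitanTextPremierV1 is an illustrative constant. The value is
// assumed to be the Bedrock model ID for Titan Text Premier.
const ModelAmazonTitanTextPremierV1 = "amazon.titan-text-premier-v1:0"

// providerOf is a hypothetical helper. It returns the part of a model ID
// before the first dot, or an empty string if the ID contains no dot.
func providerOf(modelID string) string {
	provider, _, found := strings.Cut(modelID, ".")
	if !found {
		return ""
	}
	return provider
}

func main() {
	// The provider prefix decides which request/response format applies.
	fmt.Println(providerOf(ModelAmazonTitanTextPremierV1))
}
```

With this shape, a new model from an already supported provider needs little more than a new constant and a matching test case, which fits how small the suggested change looked.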

After accepting the changes, I executed the test cases:

    cd llms/bedrock
    go test -v

All of them passed!

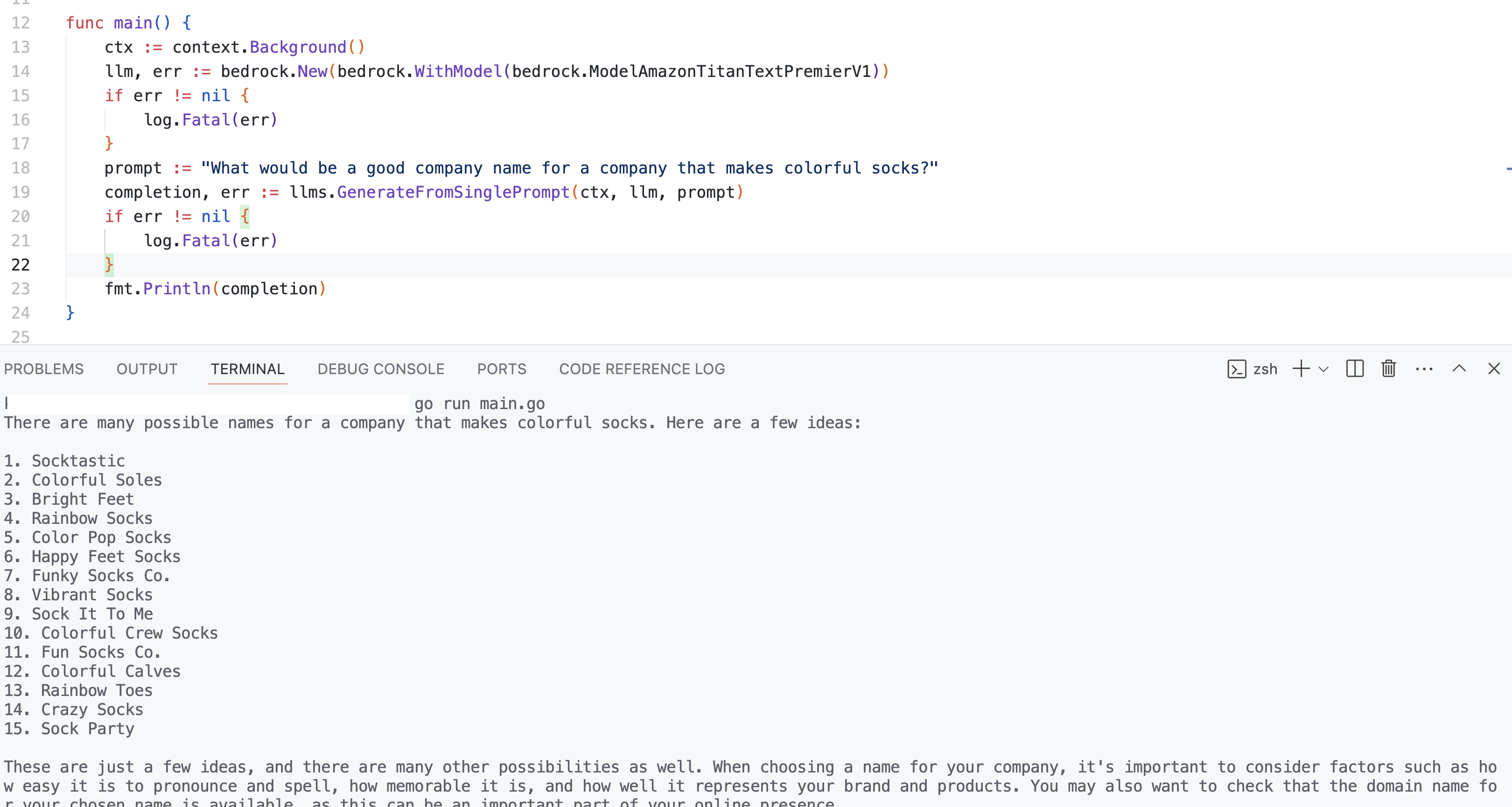

To wrap it up, I also tried this from a separate project. Here is the code that used the Titan Text Premier model (see bedrock.WithModel(bedrock.ModelAmazonTitanTextPremierV1)):

    package main

    import (
        "context"
        "fmt"
        "log"

        "github.com/tmc/langchaingo/llms"
        "github.com/tmc/langchaingo/llms/bedrock"
    )

    func main() {
        ctx := context.Background()

        // Select the newly added Titan Text Premier model.
        llm, err := bedrock.New(bedrock.WithModel(bedrock.ModelAmazonTitanTextPremierV1))
        if err != nil {
            log.Fatal(err)
        }

        prompt := "What would be a good company name for a company that makes colorful socks?"

        completion, err := llms.GenerateFromSinglePrompt(ctx, llm, prompt)
        if err != nil {
            log.Fatal(err)
        }

        fmt.Println(completion)
    }

Since I had the changes locally, I pointed go.mod to the local version of langchaingo:

    module demo

    go 1.22.0

    require github.com/tmc/langchaingo v0.1.12

    replace github.com/tmc/langchaingo v0.1.12 => /Users/foobar/demo/langchaingo

Moving on to something a bit more involved. Like the LLM component, langchaingo has a Document loader component. I wanted to add Amazon S3 so that anyone can easily incorporate data from an S3 bucket in their applications.

Amazon S3: Document Loader Implementation

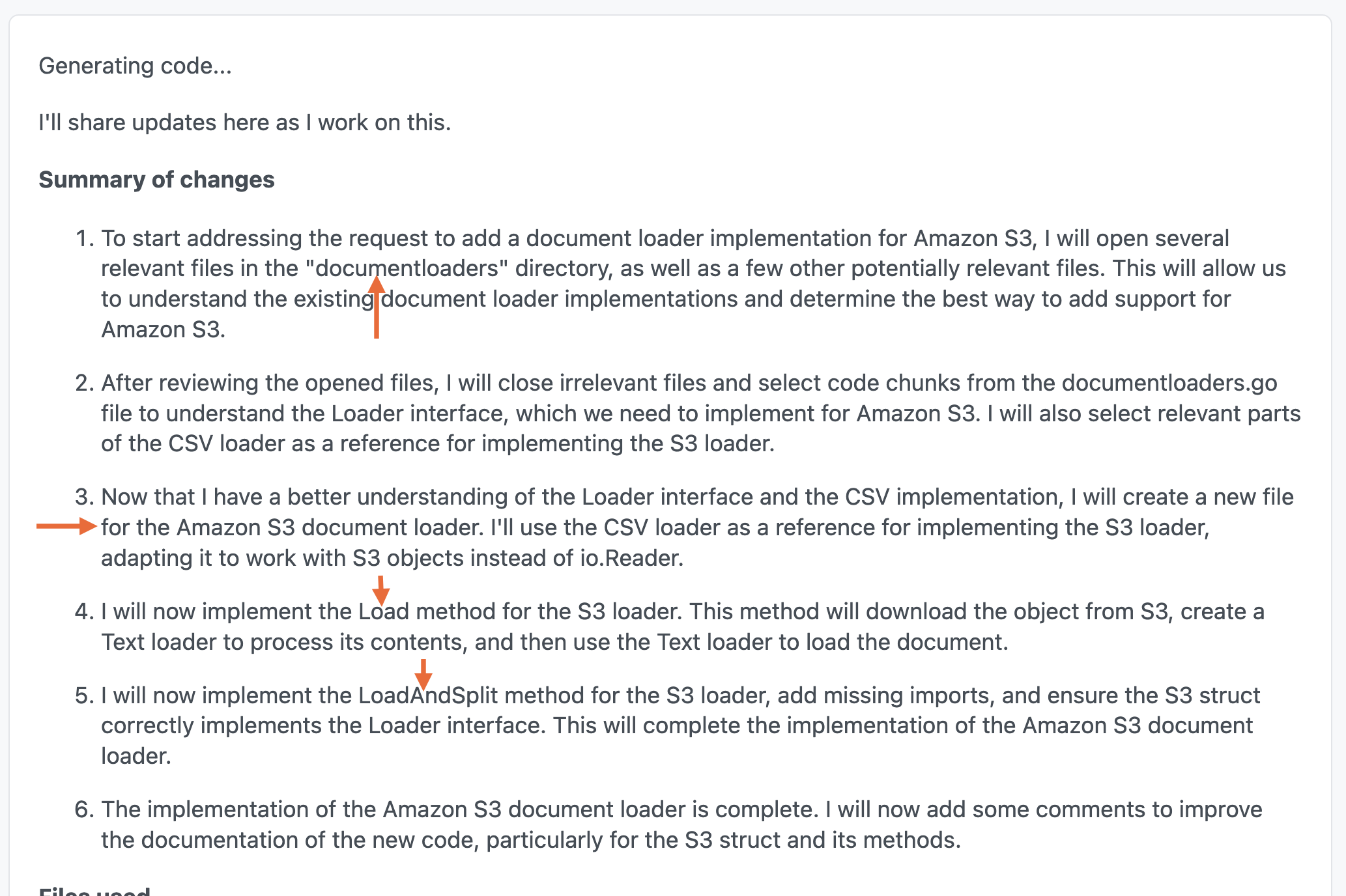

As usual, I started with a prompt: Add a document loader implementation for Amazon S3.

Using the Existing Code Base

The summary of changes is really interesting. Again, Amazon Q Developer kept its focus on what's needed to get the job done. In this case, it looked into the documentloaders directory to understand the existing implementations and planned to implement the Load and LoadAndSplit functions. Nice!

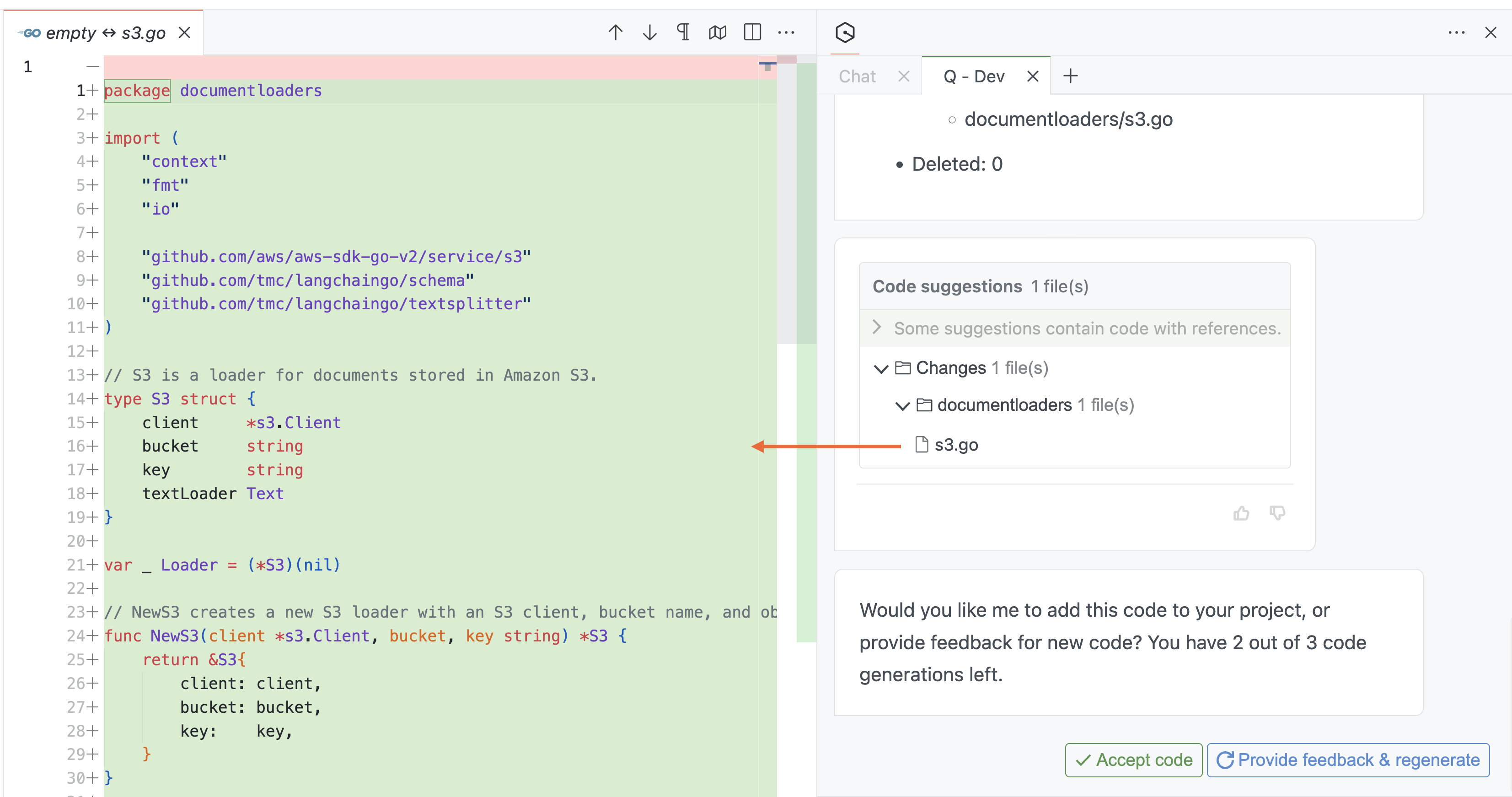

Code Suggestions, With Comments for Clarity

This gives you a clear idea of the files that were reviewed. Finally, the complete logic was in (as expected) a file called s3.go.

This is the suggested code:

I made minor changes to it after accepting it. Here is the final version:

Note that it only takes text files into account (.txt files).

    package documentloaders

    import (
        "context"
        "fmt"

        "github.com/aws/aws-sdk-go-v2/service/s3"
        "github.com/tmc/langchaingo/schema"
        "github.com/tmc/langchaingo/textsplitter"
    )

    // S3 is a loader for documents stored in Amazon S3.
    type S3 struct {
        client *s3.Client
        bucket string
        key    string
    }

    var _ Loader = (*S3)(nil)

    // NewS3 creates a new S3 loader with an S3 client, bucket name, and object key.
    func NewS3(client *s3.Client, bucket, key string) *S3 {
        return &S3{
            client: client,
            bucket: bucket,
            key:    key,
        }
    }

    // Load retrieves the object from S3 and loads it as a document.
    func (s *S3) Load(ctx context.Context) ([]schema.Document, error) {
        // Get the object from S3
        result, err := s.client.GetObject(ctx, &s3.GetObjectInput{
            Bucket: &s.bucket,
            Key:    &s.key,
        })
        if err != nil {
            return nil, fmt.Errorf("failed to get object from S3: %w", err)
        }
        defer result.Body.Close()

        // Use the Text loader to load the document
        return NewText(result.Body).Load(ctx)
    }

    // LoadAndSplit retrieves the object from S3, loads it as a document, and splits it using the provided TextSplitter.
    func (s *S3) LoadAndSplit(ctx context.Context, splitter textsplitter.TextSplitter) ([]schema.Document, error) {
        docs, err := s.Load(ctx)
        if err != nil {
            return nil, err
        }

        return textsplitter.SplitDocuments(splitter, docs)
    }

You can try it out from a client application like this:

    package main

    import (
        "context"
        "fmt"
        "log"
        "os"

        "github.com/aws/aws-sdk-go-v2/config"
        "github.com/aws/aws-sdk-go-v2/service/s3"
        "github.com/tmc/langchaingo/documentloaders"
        "github.com/tmc/langchaingo/textsplitter"
    )

    func main() {
        // The region is read from the AWS_REGION environment variable.
        cfg, err := config.LoadDefaultConfig(context.Background(), config.WithRegion(os.Getenv("AWS_REGION")))
        if err != nil {
            log.Fatal(err)
        }

        client := s3.NewFromConfig(cfg)

        s3Loader := documentloaders.NewS3(client, "test-bucket", "demo.txt")

        docs, err := s3Loader.LoadAndSplit(context.Background(), textsplitter.NewRecursiveCharacter())
        if err != nil {
            log.Fatal(err)
        }

        for _, doc := range docs {
            fmt.Println(doc.PageContent)
        }
    }

Wrap Up

These were just a few examples. These enhanced capabilities for autonomous reasoning allow Amazon Q Developer to tackle challenging tasks. I love how it iterates on the problem, tries multiple approaches as it goes, and keeps you updated about its thought process.

It's a good fit for generating code, debugging problems, improving documentation, and more. What will you use it for?