Postgres continues to reshape the database landscape beyond traditional relational database use cases. Its rich ecosystem of extensions and derived solutions has made Postgres a formidable force, especially in areas such as time series and geospatial, and most recently, gen(erative) AI workloads.

Pgvector has become a foundational extension for gen AI apps that want to use Postgres as a vector database. In short, pgvector adds a new data type, operators, and index types for working with vectorized data (embeddings) in Postgres. This lets you use the database for similarity searches over embeddings.
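To make the operators less abstract, here is a minimal pure-Python sketch of what three of pgvector's distance operators compute: `<->` (Euclidean/L2 distance), `<#>` (negative inner product), and `<=>` (cosine distance). The Python function names are illustrative and not part of pgvector; inside Postgres you would write something like `ORDER BY embedding <=> $1` for a hypothetical `embedding` column.

```python
import math

def l2_distance(a, b):
    """What pgvector's <-> operator computes: Euclidean distance."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def negative_inner_product(a, b):
    """What pgvector's <#> operator computes: the inner product, negated
    so that smaller values mean "more similar" (ascending ORDER BY works)."""
    return -sum(x * y for x, y in zip(a, b))

def cosine_distance(a, b):
    """What pgvector's <=> operator computes: 1 - cosine similarity."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1 - dot / (norm_a * norm_b)

a = [3.0, 4.0]
b = [4.0, 3.0]
print(l2_distance(a, b))             # ≈ 1.414 (square root of 2)
print(negative_inner_product(a, b))  # -24.0
print(cosine_distance(a, b))         # ≈ 0.04
```

All three treat smaller values as closer, which is why similarity queries in pgvector sort ascending by the operator's result.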

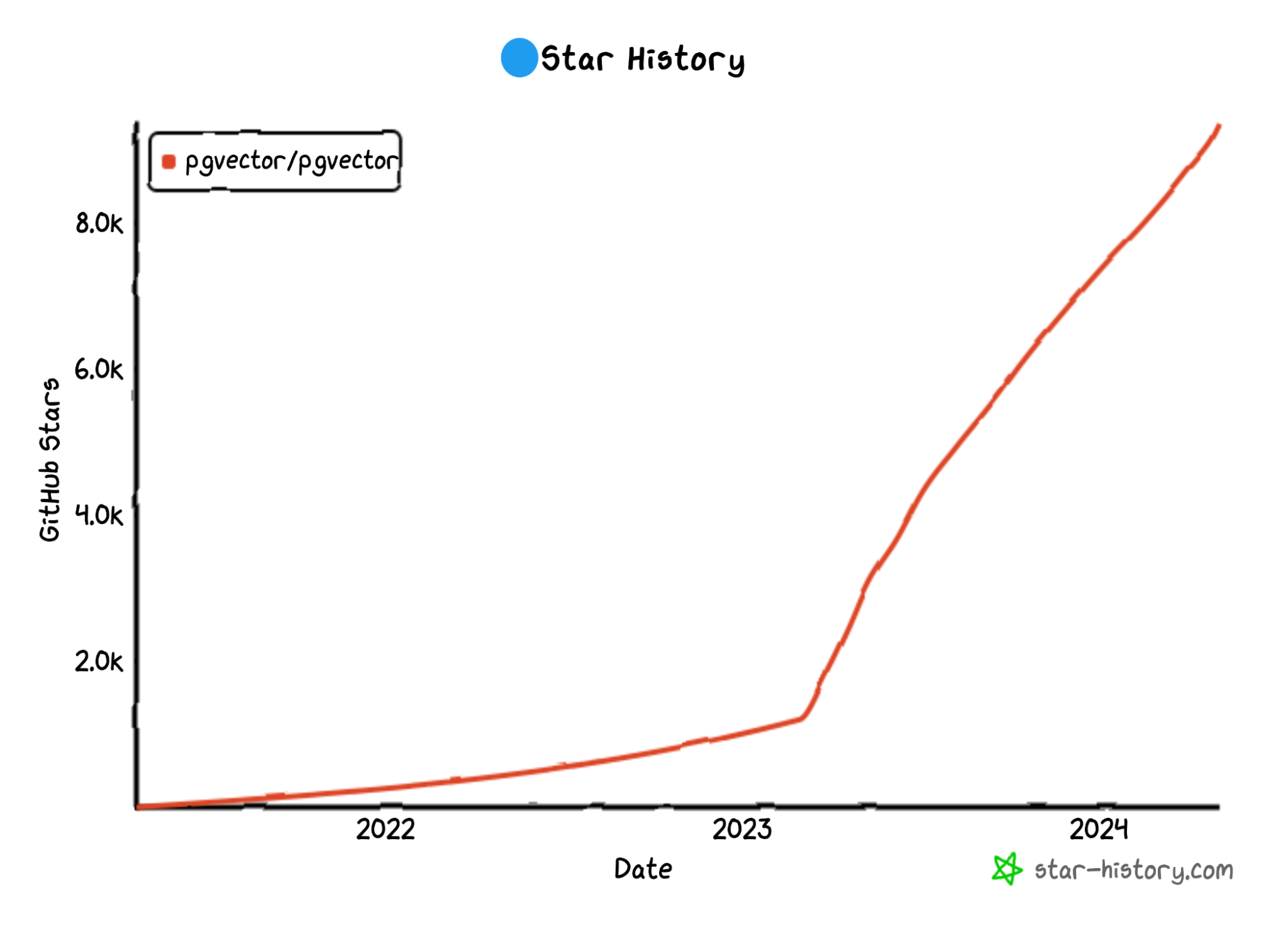

Pgvector started to take off in 2023, as its rapidly growing number of GitHub stars shows.

Pure vector databases, such as Pinecone, had to acknowledge the existence of pgvector and start publishing competitive materials. I consider this a good sign for Postgres.

Why a good sign? Well, as my fellow Postgres community member Rob Treat put it, “First they ignore you. Then they laugh at you. Then they create benchmarketing. Then you win!”

So, how is this related to the topic of distributed Postgres?

The more often Postgres is used for gen AI workloads, the more frequently you’ll hear (from vendors behind other solutions) that gen AI apps built on Postgres will have:

- Scalability and performance issues

- Challenges with data privacy

- A hard time with high availability

If you do encounter the listed issues, you shouldn’t immediately ditch Postgres and migrate to a more scalable, highly available, secure vector database, at least not until you’ve tried running Postgres in a distributed configuration!

Let’s discuss when and how you can use distributed Postgres for gen AI workloads.

What Is Distributed Postgres?

Postgres was designed for single-server deployments. This means that a single primary instance stores a consistent copy of all application data and handles both read and write requests.

How do you make a single-server database distributed? You tap into the Postgres ecosystem!

Within the Postgres ecosystem, people usually have one of the following deployment options in mind when they talk about distributed Postgres:

- Multiple standalone PostgreSQL instances with multi-master asynchronous replication and conflict resolution (like EDB Postgres Distributed)

- Sharded Postgres with a coordinator (like CitusData)

- Shared-nothing distributed Postgres (like YugabyteDB)

Check out the following guide for more information on each deployment option. In this article, let’s examine when and how you can use distributed Postgres for your gen AI workloads.

Problem #1: Embeddings Use All Available Memory and Storage Space

If you have ever used an embedding model that translates text, images, or other types of data into a vectorized representation, you might have noticed that the generated embeddings are fairly large arrays of floating-point numbers.

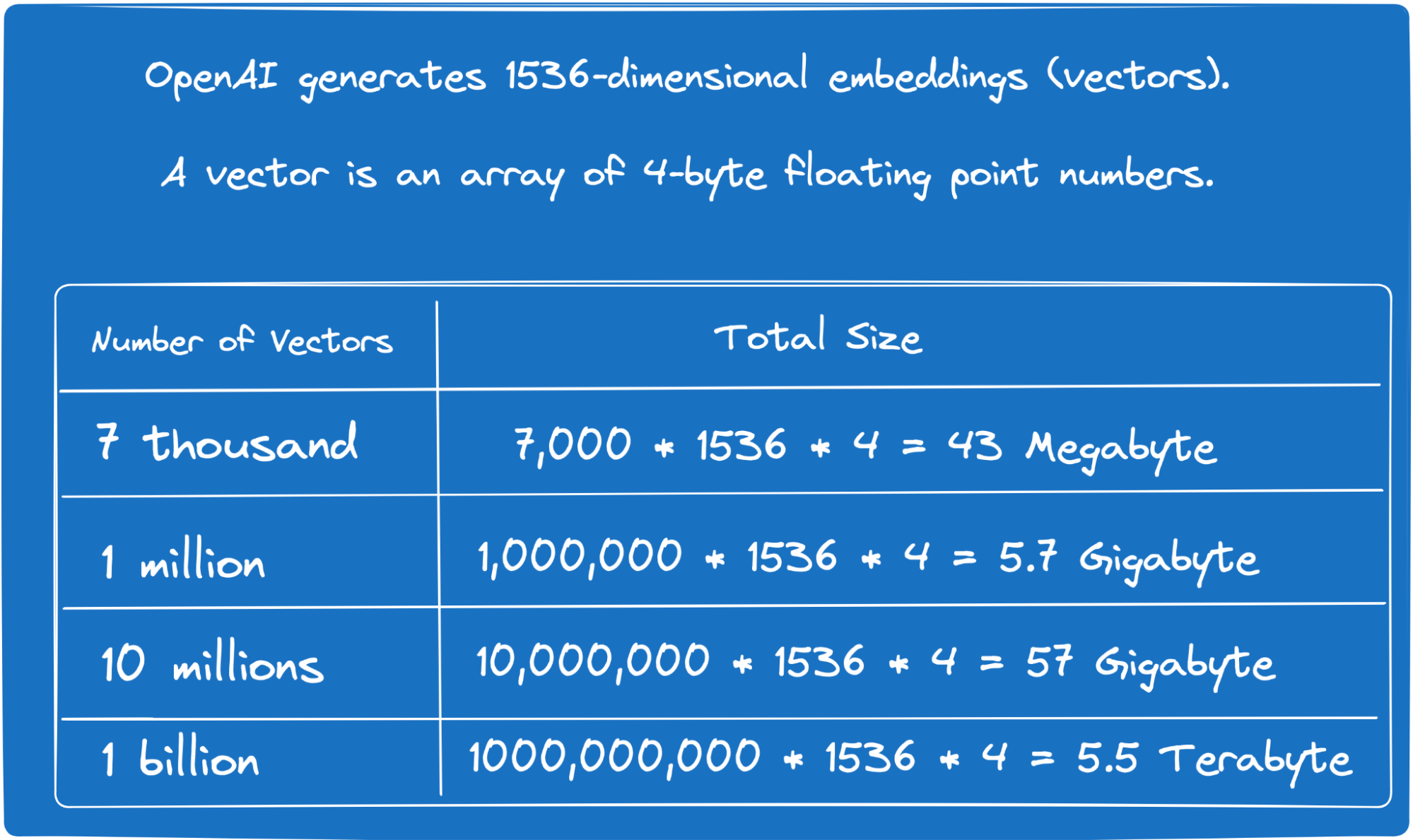

For instance, you might use an OpenAI embedding model that translates a text value into a 1536-dimensional array of floating-point numbers. Given that each item in the array is a 4-byte floating-point number, a single embedding takes approximately 6 KB, a substantial amount of data.

Now, if you have 10 million records, you would need to allocate approximately 57 gigabytes of storage and memory just for these embeddings. On top of that, you need to account for the space taken by indexes (such as HNSW and IVFFlat), which many of you will create to speed up vector similarity search.
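The arithmetic behind those numbers, as a small sketch that counts only the raw vector payload. It ignores row headers, TOAST, and indexes; according to pgvector's documentation, each stored vector also carries 8 bytes of overhead on top of 4 bytes per dimension. The helper name is hypothetical.

```python
def embedding_storage_bytes(num_vectors: int, dims: int, bytes_per_dim: int = 4) -> int:
    """Raw size of the vector payload only (no row, TOAST, or index overhead)."""
    return num_vectors * dims * bytes_per_dim

GIB = 2 ** 30

per_vector = embedding_storage_bytes(1, 1536)       # 6,144 bytes, about 6 KB
total = embedding_storage_bytes(10_000_000, 1536)   # 61,440,000,000 bytes
print(per_vector, round(total / GIB, 1))            # 6144 57.2
```

The result, about 57.2 GiB, is where the "roughly 57 gigabytes" figure comes from, before any index is built.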

Overall, the larger the number of embeddings, the more memory and storage space Postgres requires to store and manage them efficiently.

You can reduce storage and memory usage by switching to an embedding model that generates vectors with fewer dimensions, or by using quantization techniques. But suppose I need those 1536-dimensional vectors and don’t want to apply any quantization. In that case, as the number of embeddings keeps growing, I might outgrow the memory and storage capacity of my database instance.
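To show what quantization trades away, here is a minimal sketch of one common technique, symmetric scalar quantization to 8-bit integers. It cuts each dimension from 4 bytes to 1 at the cost of a small rounding error. The helper names are hypothetical, and this is not how pgvector stores vectors internally.

```python
from array import array

def quantize_int8(vec):
    """Map floats to int8 using one scale factor per vector."""
    scale = max(abs(x) for x in vec) / 127 or 1.0  # fall back to 1.0 for an all-zero vector
    return array("b", (round(x / scale) for x in vec)), scale

def dequantize(q, scale):
    """Approximate reconstruction of the original floats."""
    return [v * scale for v in q]

original = array("f", [0.12, -0.5, 0.33, 0.9, -0.07])  # float32: 4 bytes per dimension
q, scale = quantize_int8(original)                     # int8: 1 byte per dimension
restored = dequantize(q, scale)

print(original.itemsize * len(original), q.itemsize * len(q))  # 20 5
# Each value is off by at most half a quantization step.
print(max(abs(a - b) for a, b in zip(original, restored)) <= scale / 2 + 1e-9)  # True
```

The 4x saving is exactly the kind of relief that quantization offers; if your application can't tolerate the lost precision, distributing the full-precision vectors is the alternative discussed next.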

This is an obvious area where you can tap into distributed Postgres. For example, by running sharded (CitusData) or shared-nothing (YugabyteDB) versions of PostgreSQL, you can let the database distribute your embeddings evenly across an entire cluster of nodes.

With this approach, you’re no longer limited by the memory and storage capacity of a single node. If your application keeps generating more embeddings, you can always scale out the cluster by adding more nodes.
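Here is a minimal sketch of the general idea behind hash sharding and scale-out. Rows hash to a fixed set of shards, shards are placed on nodes, and adding a node moves whole shards rather than rehashing rows. The shard count, the md5 hash, and all helper names are assumptions for illustration; this is not the actual algorithm of Citus or YugabyteDB.

```python
import hashlib
from collections import Counter

NUM_SHARDS = 32  # illustrative; Citus and YugabyteDB choose their own shard/tablet counts

def shard_for(key: int) -> int:
    """Hash a row's distribution key to one of NUM_SHARDS shards.
    md5 is an arbitrary choice for this sketch, not what either database uses."""
    digest = hashlib.md5(str(key).encode()).digest()
    return int.from_bytes(digest[:4], "big") % NUM_SHARDS

def initial_placement(nodes: list[str]) -> dict[int, str]:
    """Spread shards round-robin across the nodes."""
    return {shard: nodes[shard % len(nodes)] for shard in range(NUM_SHARDS)}

def add_node(placement: dict[int, str], new_node: str) -> dict[int, str]:
    """Move shards from overloaded nodes to the new node until it holds its fair share.
    Rows never change shards; only whole shards move between nodes."""
    new_placement = dict(placement)
    counts = Counter(placement.values())
    fair_share = NUM_SHARDS // (len(counts) + 1)
    for shard in range(NUM_SHARDS):
        if counts[new_node] >= fair_share:
            break
        owner = new_placement[shard]
        if counts[owner] > fair_share:
            counts[owner] -= 1
            counts[new_node] += 1
            new_placement[shard] = new_node
    return new_placement

def rows_per_node(keys, placement: dict[int, str]) -> Counter:
    return Counter(placement[shard_for(k)] for k in keys)

keys = range(100_000)  # e.g. primary keys of rows holding embeddings
before = initial_placement(["node1", "node2", "node3"])
after = add_node(before, "node4")

print(rows_per_node(keys, before))
print(rows_per_node(keys, after))
moved = sum(1 for s in range(NUM_SHARDS) if before[s] != after[s])
print(f"{moved} of {NUM_SHARDS} shards moved to node4")
```

Going from three to four nodes moves only 8 of the 32 shards, and every node ends up holding roughly a quarter of the embeddings.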

Problem #2: Similarity Search Is a Compute-Intensive Operation

This problem is closely related to the previous one, but it focuses on CPU and GPU usage.

When we say “just perform the vector similarity search over the embeddings stored in our database,” the task sounds simple and obvious to us humans. From the database server’s perspective, however, it is a compute-intensive operation that requires significant CPU cycles.

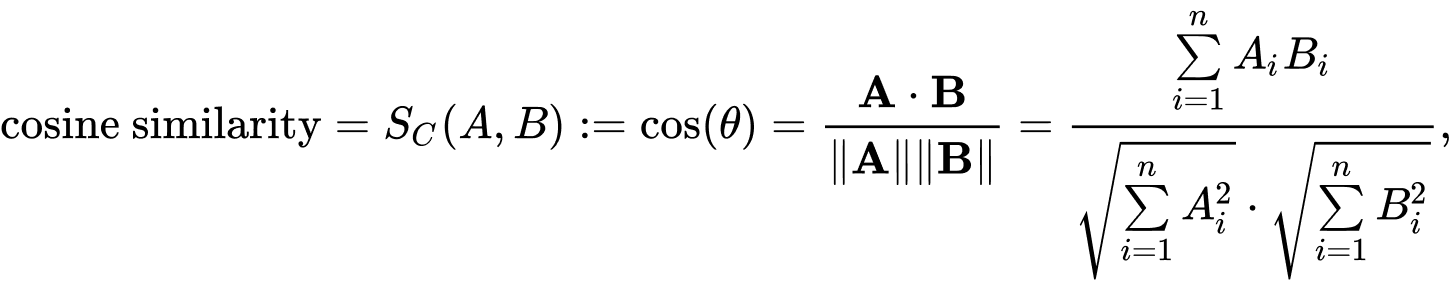

For instance, here is the formula used to calculate the cosine similarity between two vectors. We typically use cosine similarity to find the most relevant data for a given user prompt.
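In text form, this is the standard definition of cosine similarity for two vectors $A$ and $B$ with $n$ dimensions ($n = 1536$ for the embeddings discussed above):

$$
\text{cosine\_similarity}(A, B) = \frac{A \cdot B}{\lVert A \rVert \, \lVert B \rVert}
= \frac{\sum_{i=1}^{n} A_i B_i}{\sqrt{\sum_{i=1}^{n} A_i^2}\,\sqrt{\sum_{i=1}^{n} B_i^2}}
$$

pgvector’s `<=>` operator returns the cosine distance, which is $1 - \text{cosine\_similarity}(A, B)$.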

Imagine A as a vector (embedding) of a newly submitted user prompt, and B as a vector (embedding) of your unique business data stored in Postgres. If you’re in healthcare, B could be a vectorized representation of a medication and treatment for a specific disease.

To find the most relevant treatment (vector B) for the symptoms the user provided (vector A), the database must calculate the dot product and the magnitudes for A paired with every stored B, and each of those calculations runs over every dimension of the compared embeddings (the ‘i’ in the formula). If your database contains one million 1536-dimensional vectors (treatments and medications), Postgres must perform one million of these similarity calculations, each spanning all 1536 dimensions, for every user prompt.
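A minimal sketch of that exact (brute-force) search: score every stored vector against the prompt and keep the top k. The function names and the tiny random data set are illustrative assumptions. The last lines estimate the work for the article's one million 1536-dimensional vectors when nothing is precomputed; real systems typically cache or normalize the stored norms, which roughly cuts the count to a third.

```python
import heapq
import math
import random

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))     # n multiplications
    norm_a = math.sqrt(sum(x * x for x in a))  # n multiplications
    norm_b = math.sqrt(sum(y * y for y in b))  # n multiplications
    return dot / (norm_a * norm_b)

def exact_top_k(query, rows, k):
    """Brute-force (exact) search: score every stored vector against the query."""
    return heapq.nlargest(k, rows, key=lambda row: cosine_similarity(query, row[1]))

random.seed(42)
dims = 8  # tiny for the demo; the article's embeddings have 1536 dimensions
rows = [(f"treatment-{i}", [random.uniform(-1, 1) for _ in range(dims)]) for i in range(1_000)]
query = [random.uniform(-1, 1) for _ in range(dims)]
print([name for name, _ in exact_top_k(query, rows, k=3)])

# Rough cost of a single exact search over one million 1536-dimensional vectors:
num_vectors, n = 1_000_000, 1536
print(f"{num_vectors * 3 * n:,} multiplications per prompt")  # 4,608,000,000 multiplications per prompt
```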

Approximate nearest neighbor (ANN) search lets us reduce CPU and GPU usage by creating specialized indexes for vectorized data. However, with ANN you sacrifice some accuracy: you might not always get the most relevant treatments or medications, because the database doesn’t compare the prompt against every vector. Moreover, these indexes come at a cost: they take time to build and maintain, and they require dedicated memory and storage.
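To see the accuracy trade-off in action, here is a toy sketch in the spirit of an IVF index such as pgvector's IVFFlat. Vectors are grouped into lists around centroids, and a query scans only the `probes` closest lists (pgvector exposes a similar knob as the `ivfflat.probes` setting). As a simplifying assumption, centroids are random sample rows with no k-means refinement, and all names are hypothetical.

```python
import heapq
import math
import random

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def build_ivf_index(rows, nlists, seed=0):
    """Toy IVF index: pick nlists random rows as centroids (no k-means refinement)
    and put every row into the list of its most similar centroid."""
    rng = random.Random(seed)
    centroids = [vec for _, vec in rng.sample(rows, nlists)]
    lists = [[] for _ in range(nlists)]
    for row in rows:
        best = max(range(nlists), key=lambda c: cosine_similarity(row[1], centroids[c]))
        lists[best].append(row)
    return centroids, lists

def ivf_search(query, index, k, probes):
    """Approximate search: scan only the `probes` lists whose centroids are closest."""
    centroids, lists = index
    nearest = heapq.nlargest(probes, range(len(centroids)),
                             key=lambda c: cosine_similarity(query, centroids[c]))
    candidates = [row for c in nearest for row in lists[c]]
    return heapq.nlargest(k, candidates, key=lambda row: cosine_similarity(query, row[1]))

def exact_search(query, rows, k):
    return heapq.nlargest(k, rows, key=lambda row: cosine_similarity(query, row[1]))

random.seed(7)
rows = [(i, [random.gauss(0, 1) for _ in range(16)]) for i in range(2_000)]
index = build_ivf_index(rows, nlists=20)
query = [random.gauss(0, 1) for _ in range(16)]

truth = {i for i, _ in exact_search(query, rows, k=10)}
for probes in (1, 5, 20):
    found = {i for i, _ in ivf_search(query, index, k=10, probes=probes)}
    print(f"probes={probes}: recall {len(found & truth) / 10:.0%}")
```

With `probes=20`, every list is scanned and the result matches the exact search. Fewer probes scan fewer vectors but can miss some of the true nearest neighbors.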

If you don’t want to be bound by the CPU and GPU resources of a single database server, consider using a distributed version of Postgres. Whenever compute resources become a bottleneck, you can scale out your database cluster by adding new nodes. Once a new node joins the cluster, a distributed database like YugabyteDB automatically rebalances the embeddings and immediately starts using the new node’s resources.
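Why does adding nodes help with compute? A minimal scatter-gather sketch shows the reason: with the embeddings split across nodes, each node scores only its own share, and a coordinator merges the per-node top-k lists into the global answer. This shows the general pattern, not YugabyteDB's actual query execution, and the function names are hypothetical. Threads stand in for separate servers here; in a real cluster, each shard is searched on its own machine in parallel.

```python
import heapq
import math
import random
from concurrent.futures import ThreadPoolExecutor

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def local_top_k(shard_rows, query, k):
    """Runs on each node: score only the embeddings stored on that node."""
    return heapq.nlargest(k, ((cosine_similarity(query, vec), rid) for rid, vec in shard_rows))

def distributed_top_k(shards, query, k):
    """Coordinator: fan the query out to every shard, then merge the partial top-k lists."""
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        partials = pool.map(lambda shard_rows: local_top_k(shard_rows, query, k), shards)
        return heapq.nlargest(k, (hit for part in partials for hit in part))

random.seed(1)
rows = [(i, [random.gauss(0, 1) for _ in range(32)]) for i in range(3_000)]
shards = [rows[i::3] for i in range(3)]  # three "nodes", each holding a third of the rows
query = [random.gauss(0, 1) for _ in range(32)]

global_hits = heapq.nlargest(5, ((cosine_similarity(query, v), i) for i, v in rows))
print(distributed_top_k(shards, query, 5) == global_hits)  # True
```

The merge is exact because each global top-5 result is necessarily in its own shard's top 5. Adding a node shrinks every shard, so each node does less work per prompt.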

Problem #3: Data Privacy

Whenever I demonstrate what a combination of an LLM and Postgres can achieve, developers are impressed. They immediately try to fit and apply these AI capabilities to the apps they work on.

However, there is always a follow-up question about data privacy: ‘How can I leverage an LLM and an embedding model without compromising data privacy?’ The answer is twofold.

First, if you don’t trust a particular LLM or embedding model provider, you can use private or open-source models instead. For instance, use Mistral, LLaMA, or other models from Hugging Face that you can install and run in your own data centers or cloud environments.

Second, some applications must comply with data residency requirements, which ensure that all data used or generated by the private LLM and embedding model never leaves a specific location (data center, cloud region, or zone).

In this case, you can run several standalone Postgres instances, each working with data from a specific location, and let the application layer orchestrate access across the database servers.
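At its core, the application-layer option is a routing table: every request carries the location its data belongs to, and the application connects only to that location's instance. Below is a minimal sketch of that logic. The hostnames, region labels, and helper names are hypothetical, and a real application would open the connection with its Postgres driver of choice.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RegionalDatabase:
    region: str
    dsn: str  # connection string for the standalone Postgres instance in that location

# Hypothetical deployment: one standalone Postgres instance per location.
DATABASES = {
    "New York": RegionalDatabase("us-east", "postgresql://db-us-east.example.com/medical"),
    "Chicago": RegionalDatabase("us-central", "postgresql://db-us-central.example.com/medical"),
    "San Francisco": RegionalDatabase("us-west", "postgresql://db-us-west.example.com/medical"),
}

def database_for(location: str) -> RegionalDatabase:
    """Return the only instance allowed to hold this location's data; never fall back elsewhere."""
    try:
        return DATABASES[location]
    except KeyError:
        raise ValueError(f"no database is approved for data from {location!r}") from None

print(database_for("Chicago").dsn)  # postgresql://db-us-central.example.com/medical
```

Raising an error for unknown locations, rather than falling back to a default server, is the point of the design: a misrouted write would break the residency guarantee.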

Another option is to use the geo-partitioning capabilities of distributed Postgres deployments, which automate data distribution and access across multiple regions and simplify application logic.

Let’s continue with the healthcare use case to see how geo-partitioning lets us distribute information about medications and treatments across the locations required by data regulators. Here I’ve used YugabyteDB as an example of a distributed Postgres deployment.

Imagine three hospitals, one in San Francisco and the others in Chicago and New York. We deploy a single distributed YugabyteDB cluster, with several nodes in regions (or private data centers) near each hospital’s location.

To comply with data privacy and regulatory requirements, we must ensure that the medical data from these hospitals never leaves their respective data centers.

With geo-partitioning, we can achieve this as follows:

- Create Postgres tablespaces and map them to cloud regions in US West, Central, and East. There’s at least one YugabyteDB node in every region.

```sql
-- Each tablespace pins its data to one GCP region/zone; the placement policy is a JSON string.
CREATE TABLESPACE usa_east_ts WITH (
    replica_placement='{"num_replicas": 1, "placement_blocks":
    [{"cloud":"gcp","region":"us-east4","zone":"us-east4-a","min_num_replicas":1}]}'
);

CREATE TABLESPACE usa_central_ts WITH (
    replica_placement='{"num_replicas": 1, "placement_blocks":
    [{"cloud":"gcp","region":"us-central1","zone":"us-central1-a","min_num_replicas":1}]}'
);

CREATE TABLESPACE usa_west_ts WITH (
    replica_placement='{"num_replicas": 1, "placement_blocks":
    [{"cloud":"gcp","region":"us-west1","zone":"us-west1-a","min_num_replicas":1}]}'
);
```

- Create a `treatment` table that stores information about treatments and medications. Each treatment has an associated multi-dimensional vector, `description_vector`, which an embedding model generates from the treatment’s description. Finally, the table is partitioned by the `hospital_location` column.

```sql
-- The vector type comes from the pgvector extension (CREATE EXTENSION vector;)
CREATE TABLE treatment (
    id int,
    name text,
    description text,
    description_vector vector(1536),
    hospital_location text NOT NULL
)
PARTITION BY LIST (hospital_location);
```

- The partitions are defined as follows. For instance, the data of `hospital3`, which is in San Francisco, is automatically mapped to the `usa_west_ts` tablespace, whose data belongs to the database nodes in US West.

```sql
CREATE TABLE treatments_hospital1 PARTITION OF treatment
    (id, name, description, description_vector, PRIMARY KEY (id, hospital_location))
    FOR VALUES IN ('New York') TABLESPACE usa_east_ts;

CREATE TABLE treatments_hospital2 PARTITION OF treatment
    (id, name, description, description_vector, PRIMARY KEY (id, hospital_location))
    FOR VALUES IN ('Chicago') TABLESPACE usa_central_ts;

CREATE TABLE treatments_hospital3 PARTITION OF treatment
    (id, name, description, description_vector, PRIMARY KEY (id, hospital_location))
    FOR VALUES IN ('San Francisco') TABLESPACE usa_west_ts;
```

Once you’ve deployed a geo-partitioned database cluster and defined the required tablespaces and partitions, let the application connect to it and allow the LLM to access the data. For instance, the LLM can query the `treatment` table directly using:

```sql
-- $user_prompt_vector and $location are placeholders bound by the application.
-- 1 - (a <=> b) is the cosine similarity, since <=> returns the cosine distance.
SELECT name, description FROM treatment
WHERE 1 - (description_vector <=> $user_prompt_vector) > 0.8
  AND hospital_location = $location;
```

The distributed Postgres database automatically routes the request to the node that stores data for the specified `hospital_location`. The same applies to INSERTs and UPDATEs: changes to the `treatment` table are always stored in the partition, tablespace, and nodes that belong to that hospital’s location. These changes are never replicated to other regions.

Problem #4: High Availability

Although Postgres was designed to run in a single-server configuration, this doesn’t mean it can’t run in a highly available setup. Depending on your desired recovery point objective (RPO) and recovery time objective (RTO), there are several options.

So, how is distributed Postgres useful here? With distributed PostgreSQL, your gen AI apps can remain operational even during zone, data center, or regional outages.

For instance, with YugabyteDB, you simply deploy a multi-node distributed Postgres cluster and let the nodes handle fault tolerance and high availability. The nodes communicate directly with each other. If one node fails, the others detect the outage. Because the remaining nodes hold redundant, consistent copies of the data, they can immediately start processing the application requests that were previously sent to the failed node. YugabyteDB provides RPO = 0 (no data loss) and an RTO of 3 to 15 seconds (depending on the database and TCP/IP configuration defaults).
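YugabyteDB replicates each shard (tablet) with the Raft consensus protocol. A write is acknowledged only after a majority of replicas has it, so a surviving majority always holds every committed write, which is where RPO = 0 comes from. The arithmetic below is a simplified sketch: it ignores leader-election timing, assumes one replica per fault domain (zone or region), and uses hypothetical helper names.

```python
def majority(replication_factor: int) -> int:
    """Smallest number of replicas that forms a majority (a Raft quorum)."""
    return replication_factor // 2 + 1

def tolerated_failures(replication_factor: int) -> int:
    """How many replicas (fault domains) can fail while a majority survives."""
    return replication_factor - majority(replication_factor)

def still_available(replication_factor: int, failed: int) -> bool:
    """Reads and writes continue as long as the surviving replicas form a majority."""
    return replication_factor - failed >= majority(replication_factor)

for rf in (3, 5):
    print(f"RF={rf}: majority={majority(rf)}, survives {tolerated_failures(rf)} failure(s)")
# RF=3: majority=2, survives 1 failure(s)
# RF=5: majority=3, survives 2 failure(s)
```

So surviving the loss of an entire region requires spreading at least three replicas across three regions. This is a different trade-off from the single-replica tablespaces in the geo-partitioning example, which prioritize data residency.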

This way, you can build gen AI apps and autonomous agents that keep running even during region-level incidents and other catastrophic events.

Summary

Thanks to extensions like pgvector, PostgreSQL has evolved beyond traditional relational database use cases and is now a strong contender for generative AI applications. However, working with embeddings can pose some challenges, including significant memory and storage consumption, compute-intensive similarity searches, data privacy concerns, and the need for high availability.

Distributed PostgreSQL deployments offer scalability, load balancing, and geo-partitioning, which support data residency compliance and uninterrupted operations. By leveraging these distributed systems, you can build gen AI applications that scale with your data and keep running through failures.