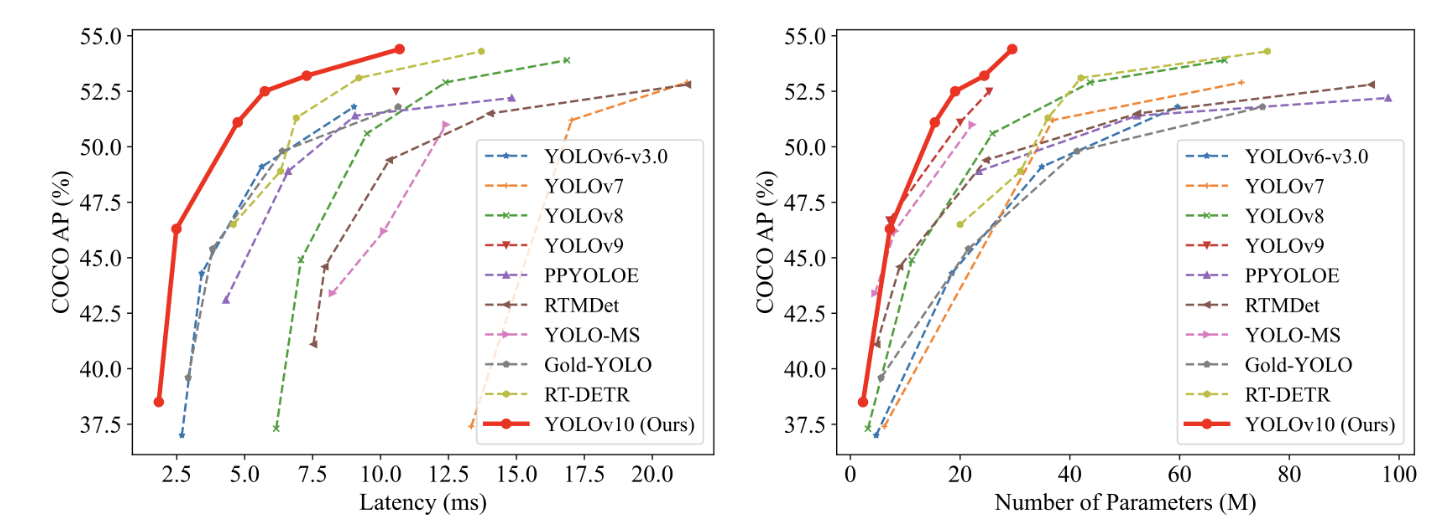

YOLOv10 (You Only Look Once v10), released by Tsinghua University on May 23, 2024, offers a significant improvement over YOLOv9. It achieves a 46% reduction in latency and uses 25% fewer parameters, all while delivering the same level of performance.

2. YOLOv10 Visual Object Detection: Overview

2.1 What Is YOLO?

YOLO (You Only Look Once) is an object detection algorithm based on deep neural networks, designed to identify and locate multiple objects in images or videos in real time. YOLO is renowned for its fast processing speed and high accuracy, making it ideal for applications that require rapid object detection, such as real-time video analysis, autonomous driving, and smart healthcare.

Before YOLO, the dominant algorithm was R-CNN, a “two-stage” approach: first generating candidate boxes (region proposals) and then predicting the objects inside those boxes. YOLO revolutionized this by allowing “one-stage” direct, end-to-end output of objects and their locations.

- One-stage algorithms: These models perform direct regression to output object probabilities and their coordinates. Examples include SSD, YOLO, and MTCNN.

- Two-stage algorithms: These first generate multiple candidate boxes and then use convolutional neural networks to output the probability and coordinates of objects inside those boxes. Examples include the R-CNN series.

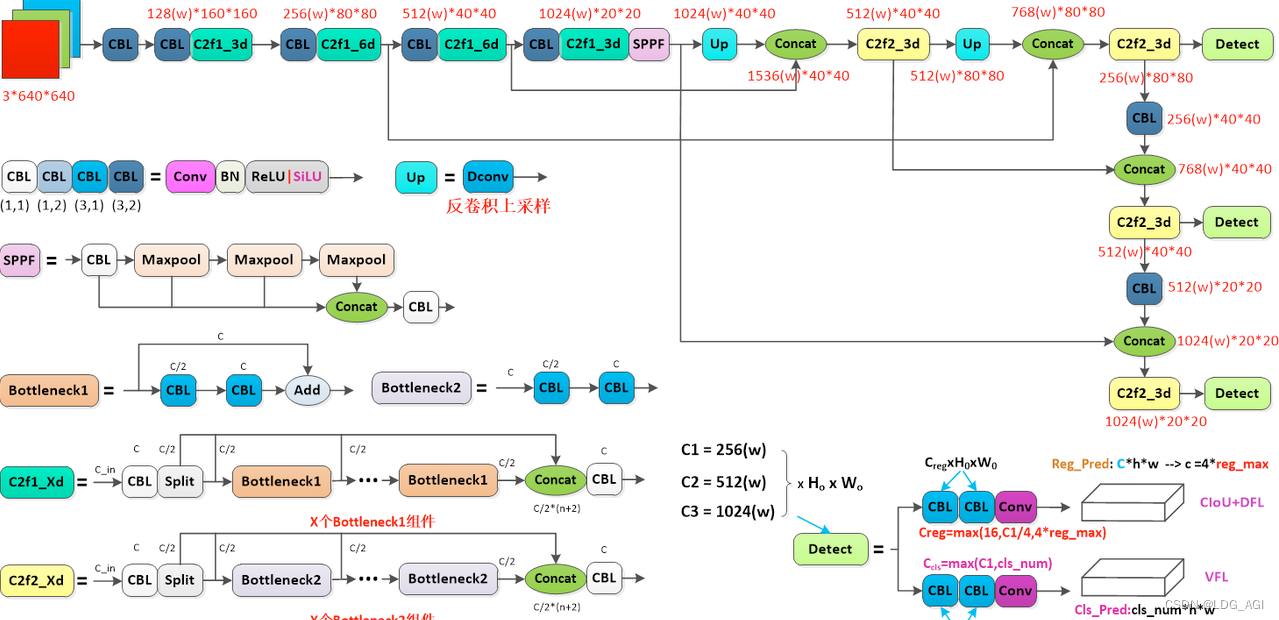

2.2 YOLO’s Network Structure

YOLOv10 is an enhancement of YOLOv8. Let’s take a brief look at the network structure of YOLOv8:

3. YOLOv10 Visual Object Detection: Training and Inference

3.1 Installing YOLOv10

3.1.1 Clone the Repository

Begin by cloning the YOLOv10 repository from GitHub:

    git clone https://github.com/THU-MIG/yolov10.git

3.1.2 Create a Conda Environment

Next, create a new Conda environment specifically for YOLOv10 and activate it:

    conda create -n yolov10 python=3.10
    conda activate yolov10

3.1.3 Download and Compile Dependencies

To install the required dependencies, it is advisable to use the Tencent pip mirror for faster downloads:

    pip install -r requirements.txt -i https://mirrors.cloud.tencent.com/pypi/simple
    pip install -e . -i https://mirrors.cloud.tencent.com/pypi/simple

3.2 Model Inference With YOLOv10

3.2.1 Model Download

To get started with YOLOv10, you can download the pre-trained models using the following links:

3.2.2 WebUI Inference

To perform inference using the WebUI, follow these steps:

- Navigate to the root directory of the YOLOv10 project. Run the following command to start the application:

- Once the server starts successfully, you will see a message indicating that the application is running and ready for use.

3.2.3 Command Line Inference

For command line inference, you can use the yolo command within your Conda environment. Here's how to set up and execute it:

Activate the YOLOv10 Conda environment: Ensure you have activated the environment you created earlier for YOLOv10.

Run inference using the command line: Use the yolo predict command to perform predictions. You need to specify the model, device, and source image path as follows:

    yolo predict model=yolov10n.pt device=2 source=/aigc_dev/yolov10/ultralytics/assets

- model: Specifies the path to the downloaded model file (e.g., yolov10n.pt).

- device: Specifies which GPU to use (e.g., device=2 for GPU #2).

- source: Specifies the path to the images you want to detect objects in.
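If you want to launch the same prediction from a script, the key=value arguments can be assembled programmatically. The following is a minimal sketch, not part of the YOLOv10 project: `build_yolo_command` is a hypothetical helper introduced here for illustration, and it only builds the argument list without running anything.

```python
import shlex


def build_yolo_command(task, **options):
    """Return the argv list for a `yolo <task> key=value ...` call.

    Hypothetical helper: it only formats arguments and does not
    check that the options are valid for the yolo CLI.
    """
    argv = ["yolo", *task.split()]
    argv += [f"{key}={value}" for key, value in options.items()]
    return argv


# Same arguments as the command shown in the text.
cmd = build_yolo_command(
    "predict",
    model="yolov10n.pt",
    device=2,
    source="/aigc_dev/yolov10/ultralytics/assets",
)
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from inside the activated Conda environment.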

Default paths and results:

- By default, the images to be detected should be placed in the yolov10/ultralytics/assets directory.

- After detection, the results will be saved in a directory named yolov10/runs/detect/predictxx, where xx represents a unique identifier for each run.
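Because each run writes to a new predictxx directory, it can be handy to locate the latest one automatically. The sketch below assumes the directory layout described above; `latest_predict_dir` is a hypothetical helper, and it picks the newest directory by modification time rather than by parsing the suffix.

```python
from pathlib import Path


def latest_predict_dir(runs_root):
    """Return the most recently modified predict* directory, or None.

    Assumes results live under <runs_root>/detect/predict*.
    """
    detect_dir = Path(runs_root) / "detect"
    if not detect_dir.is_dir():
        return None
    candidates = [p for p in detect_dir.glob("predict*") if p.is_dir()]
    # max() with default=None handles the case of no runs yet.
    return max(candidates, key=lambda p: p.stat().st_mtime, default=None)


# Prints None when no prediction has been run yet.
print(latest_predict_dir("yolov10/runs"))
```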

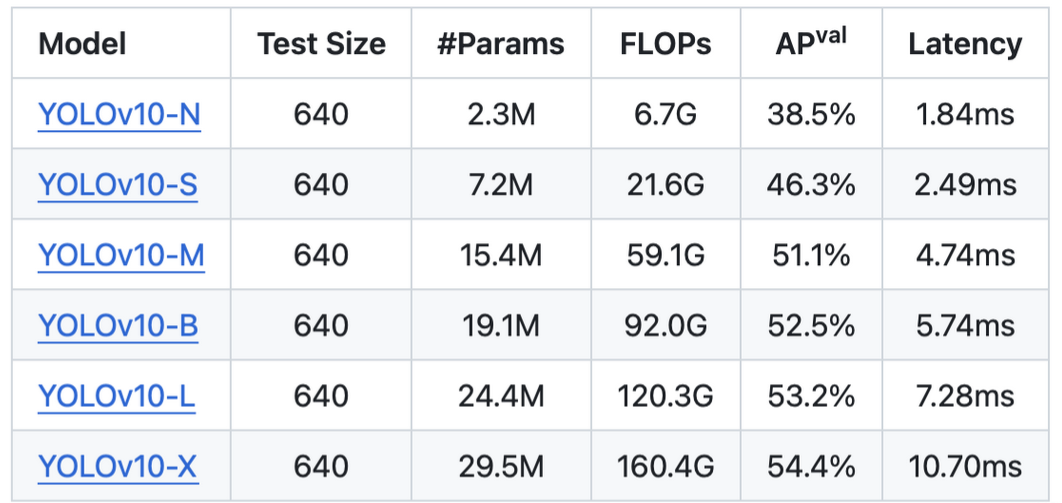

Benchmark on the COCO dataset.

3.3 Training the YOLOv10 Model

In addition to inference, YOLOv10 also supports training on custom datasets. Here's how you can train the model using the command line:

To initiate training with YOLOv10, use the following command:

    yolo detect train data=coco.yaml model=yolov10s.yaml epochs=100 batch=128 imgsz=640 device=2

Here's a breakdown of the command options:

- detect train: Specifies that you want to perform training for object detection.

- data=coco.yaml: Specifies the dataset configuration file. The default dataset (COCO) is downloaded and saved in the ../datasets/coco directory.

- model=yolov10s.yaml: Specifies the configuration file for the model you want to train.

- epochs=100: Sets the number of training epochs (full passes over the training set).

- batch=128: Specifies the batch size for training, i.e., the number of images processed in each training step.

- imgsz=640: Indicates the image size to which all input images will be resized during training.

- device=2: Specifies which GPU to use for training (e.g., device=2 for GPU #2).

Example Explanation

Assuming you have set up the YOLOv10 environment and dataset properly, running the above command will start the training process on the specified GPU. The model will be trained for 100 epochs with a batch size of 128, and the input images will be resized to 640×640 pixels.
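To get a feel for what these settings mean in practice, you can estimate the number of optimizer steps. This is a back-of-the-envelope sketch under two assumptions that are not stated in the text: the COCO train2017 split contains 118,287 images, and the final partial batch of each epoch is still processed.

```python
import math


def training_iterations(num_images, batch_size, epochs):
    """Return (iterations per epoch, total iterations).

    Assumes the last, smaller batch of each epoch is kept.
    """
    per_epoch = math.ceil(num_images / batch_size)
    return per_epoch, per_epoch * epochs


# 118,287 images is the assumed size of COCO train2017.
per_epoch, total = training_iterations(118_287, 128, 100)
print(per_epoch, total)  # 925 92500
```

Halving the batch size to fit a smaller GPU roughly doubles the number of iterations per epoch.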

Steps To Train YOLOv10

Prepare Your Dataset

- Ensure your dataset is properly formatted and described in the coco.yaml file (or your own custom dataset configuration file).

- The dataset configuration file includes paths to your training and validation data, as well as the number of classes.
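A custom dataset configuration can be sketched as follows. The keys `path`, `train`, `val`, and `names` follow the Ultralytics dataset YAML convention as I understand it; the directory layout and class names are made up for illustration, and `dataset_yaml` is a hypothetical helper. The number of classes is implied by the length of the `names` mapping.

```python
def dataset_yaml(root, train, val, class_names):
    """Render a minimal dataset configuration as YAML text.

    Assumes simple names and paths that need no YAML quoting.
    """
    lines = [
        f"path: {root}",    # dataset root directory
        f"train: {train}",  # training images, relative to path
        f"val: {val}",      # validation images, relative to path
        "names:",           # class index -> class name
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


# Hypothetical two-class dataset.
config = dataset_yaml(
    "../datasets/my_dataset", "images/train", "images/val", ["cat", "dog"]
)
print(config)
```

Saving this text as, say, my_dataset.yaml and passing data=my_dataset.yaml to the training command would replace coco.yaml.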

Configure the Model

- The model configuration file (e.g., yolov10s.yaml) defines the architecture of the YOLOv10 variant you’re training. Training from a .yaml file starts from randomly initialized weights; to start from pre-trained weights, pass a .pt file instead.

Run the Training Command

- Use the command provided above to start the training process. Adjust parameters like epochs, batch, imgsz, and device based on your hardware capabilities and training requirements.

Monitor and Evaluate

- During training, monitor the progress through logs or a visual tool if available.

- After training, evaluate the model performance on a validation set to ensure it meets your expectations.
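Detection metrics reported during validation, such as mAP, are built on the intersection over union (IoU) between predicted and ground-truth boxes. The function below is a generic sketch of that building block for boxes in (x1, y1, x2, y2) format; it is not the evaluation code used by YOLOv10 itself.

```python
def box_iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    # Width and height of the overlap, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# Two 2x2 boxes overlapping in a 1x1 square: IoU = 1 / 7.
print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

A prediction is typically counted as correct when its IoU with a ground-truth box of the same class exceeds a chosen threshold such as 0.5.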

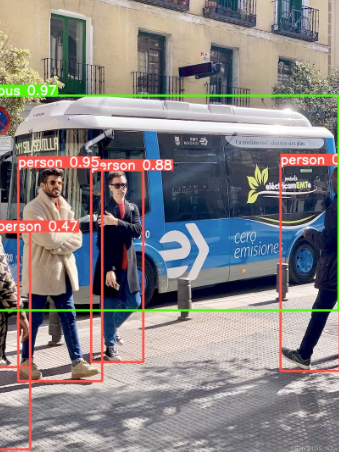

A demo of using YOLOv10 for real-time online object detection:

    import cv2
    from ultralytics import YOLOv10

    # Load the pre-trained YOLOv10-S weights.
    model = YOLOv10("yolov10s.pt")

    # Open the default camera (index 0).
    cap = cv2.VideoCapture(0)

    while True:
        ret, frame = cap.read()
        if not ret:
            break

        # Run detection on the current frame.
        results = model.predict(frame)

        for result in results:
            boxes = result.boxes
            for box in boxes:
                x1, y1, x2, y2 = map(int, box.xyxy[0])
                cls = int(box.cls[0])
                conf = float(box.conf[0])
                # Draw the bounding box and a "class confidence" label.
                cv2.rectangle(frame, (x1, y1), (x2, y2), (255, 0, 0), 2)
                cv2.putText(frame, f'{model.names[cls]} {conf:.2f}', (x1, y1 - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 0, 0), 2)

        cv2.imshow('YOLOv10', frame)

        # Press "q" to quit.
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # Release the camera and close the preview window.
    cap.release()
    cv2.destroyAllWindows()

You can build your own workstation to run or train an AI system. To save money, you can also find inexpensive parts such as GPUs online.