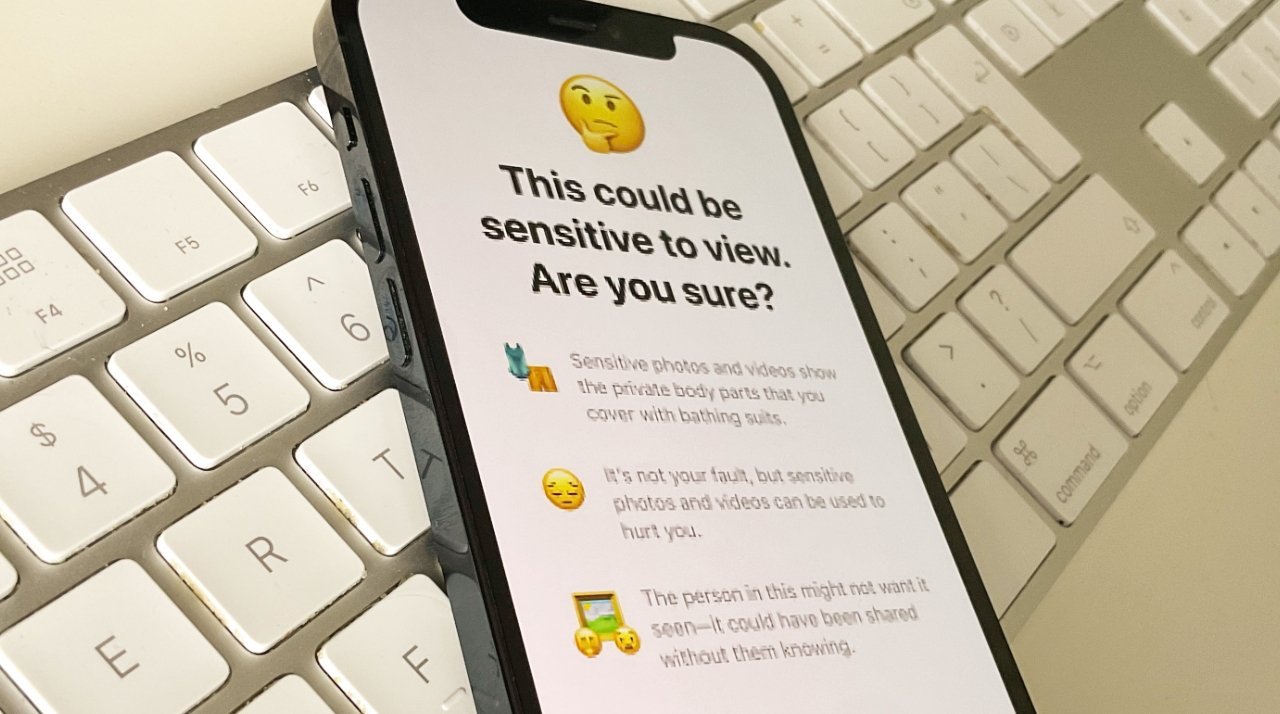

Apple cancelled its major CSAM proposals but introduced features such as automatic blocking of nudity sent to children

A proposed class action lawsuit claims that Apple is hiding behind privacy in order to avoid stopping the storage of child sexual abuse material (CSAM) on iCloud, as well as alleged grooming over iMessage.

A new filing with the US District Court for the Northern District of California has been brought on behalf of an unnamed 9-year-old plaintiff. Identified only as Jane Doe in the complaint, she was coerced into making and uploading CSAM on her iPad, according to the filing.

“When 9-year-old Jane Doe received an iPad for Christmas,” says the filing, “she never imagined perpetrators would use it to coerce her to produce and upload child sexual abuse material (‘CSAM’) to iCloud.”

“This lawsuit alleges that Apple exploits ‘privacy’ at its own whim,” says the filing, “at times emblazoning ‘privacy’ on city billboards and slick commercials and at other times using privacy as a justification to look the other way while Doe and other children’s privacy is utterly trampled on through the proliferation of CSAM on Apple’s iCloud.”